CIFAR-10 Demo V3

Using Keras’ Data Augmentation

Step 1: Organize Imports

from keras import backend as K

from keras.datasets import cifar10

from keras.utils import np_utils

from keras.models import Sequential

from keras.layers.core import Dense, Dropout, Activation, Flatten

from keras.layers.convolutional import Conv2D, MaxPooling2D

from keras.optimizers import SGD, Adam, RMSprop

from keras.preprocessing.image import ImageDataGenerator

import matplotlib.pyplot as plt

%matplotlib inline

Using TensorFlow backend.

Step 2: Set the constants

CIFAR-10 is a set of 60,000 color images (50,000 for training and 10,000 for testing), each 32x32 pixels with 3 channels.

IMG_CHANNELS = 3

IMG_ROWS = 32

IMG_COLS = 32

INPUT_SHAPE = (IMG_ROWS, IMG_COLS, IMG_CHANNELS) # channels last

BATCH_SIZE = 128

NB_EPOCH = 40

NB_CLASSES = 10

VERBOSE = 1

VALIDATION_SPLIT = 0.2

OPTIM = RMSprop()

Step 3: Load dataset

(X_train, y_train), (X_test, y_test) = cifar10.load_data()

print('X_train shape:', X_train.shape)

print(X_train.shape[0], 'train samples')

print(X_test.shape[0], 'test samples')

X_train shape: (50000, 32, 32, 3)

50000 train samples

10000 test samples

Step 4: Convert to categorical (OHE)

Y_train = np_utils.to_categorical(y_train, NB_CLASSES)

Y_test = np_utils.to_categorical(y_test, NB_CLASSES)
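As a rough illustration of what this step produces, the sketch below one-hot encodes a few labels with plain NumPy. `one_hot` is a hypothetical helper written for this example, not the Keras implementation, and the three labels are made up. It assumes integer labels shaped `(n, 1)`, which is the shape `cifar10.load_data()` returns.

```python
import numpy as np

def one_hot(labels, num_classes):
    """Hypothetical helper: turn integer class labels into one-hot rows."""
    labels = np.asarray(labels, dtype="int64").ravel()  # (n, 1) -> (n,)
    encoded = np.zeros((labels.shape[0], num_classes), dtype="float32")
    # Set a single 1.0 per row, in the column given by the label.
    encoded[np.arange(labels.shape[0]), labels] = 1.0
    return encoded

# Three made-up labels in the same layout as y_train.
y_demo = np.array([[3], [8], [0]])
Y_demo = one_hot(y_demo, 10)
print(Y_demo.shape)
print(Y_demo.argmax(axis=1))
```

Taking `argmax` along axis 1 recovers the original integer labels, which is what Step 13 does with `np.argmax(Y_test[i])`.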

Step 5: Convert to float and normalize

X_train = X_train.astype('float32')

X_test = X_test.astype('float32')

X_train /= 255

X_test /= 255
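The order of the two operations matters: the images are loaded as `uint8`, and an in-place division cannot store fractional results in an integer array. The sketch below shows this on three made-up pixel values. It is only meant to illustrate the dtype issue, not to replace the step above.

```python
import numpy as np

# Three made-up pixel values stored as uint8, the dtype CIFAR-10 images load with.
pixels = np.array([0, 128, 255], dtype="uint8")

# In-place true division would need to write floats into a uint8 array,
# so NumPy refuses the operation.
try:
    pixels /= 255
    in_place_failed = False
except TypeError:
    in_place_failed = True

# Converting to float32 first gives an array that can hold values in [0, 1].
scaled = pixels.astype("float32")
scaled /= 255
print(in_place_failed, scaled)
```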

Step 6: Define the ConvNet model v2

class MyCNN:
    @staticmethod
    def build(input_shape, classes):
        model = Sequential()

        # Block 1: two 3x3 convolutions with 32 filters, then pooling and dropout
        model.add(Conv2D(32, (3, 3), padding='same', input_shape=input_shape))
        model.add(Activation('relu'))
        model.add(Conv2D(32, (3, 3), padding='same'))
        model.add(Activation('relu'))
        model.add(MaxPooling2D(pool_size=(2, 2)))
        model.add(Dropout(0.25))

        # Block 2: two 3x3 convolutions with 64 filters (the second one unpadded)
        model.add(Conv2D(64, (3, 3), padding='same'))
        model.add(Activation('relu'))
        model.add(Conv2D(64, (3, 3)))
        model.add(Activation('relu'))
        model.add(MaxPooling2D(pool_size=(2, 2)))
        model.add(Dropout(0.25))

        # Classifier: dense layer with dropout, then a softmax over the classes
        model.add(Flatten())
        model.add(Dense(512))
        model.add(Activation('relu'))
        model.add(Dropout(0.5))
        model.add(Dense(classes))
        model.add(Activation('softmax'))

        model.summary()
        return model
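The layer sizes and parameter counts that `model.summary()` reports for this network can be checked by hand. The sketch below redoes the arithmetic in plain Python; the helper names are introduced here for illustration. It assumes stride-1 convolutions, 2x2 pooling that halves each spatial dimension, and one bias per filter or unit. Note that the fourth `Conv2D` has no `padding='same'`, so it shrinks the feature map from 16 to 14.

```python
def conv2d_params(kernel_h, kernel_w, in_channels, filters):
    """Weights of every filter plus one bias per filter."""
    return (kernel_h * kernel_w * in_channels + 1) * filters

def dense_params(inputs, units):
    """One weight per input plus one bias, for every unit."""
    return (inputs + 1) * units

def conv_output_size(size, kernel, padding):
    """Spatial size after a stride-1 convolution."""
    return size if padding == "same" else size - kernel + 1

# Follow the spatial size of the 32x32 input through the network.
size = 32
size = conv_output_size(size, 3, "same")   # first Conv2D
size = conv_output_size(size, 3, "same")   # second Conv2D
size //= 2                                 # first MaxPooling2D
size = conv_output_size(size, 3, "same")   # third Conv2D
size = conv_output_size(size, 3, "valid")  # fourth Conv2D (no padding)
size //= 2                                 # second MaxPooling2D
flat = size * size * 64                    # input width of the first Dense layer

counts = [
    conv2d_params(3, 3, 3, 32),
    conv2d_params(3, 3, 32, 32),
    conv2d_params(3, 3, 32, 64),
    conv2d_params(3, 3, 64, 64),
    dense_params(flat, 512),
    dense_params(512, 10),
]
total = sum(counts)
print(flat, counts, total)
```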

Step 7: Define the ImageDataGenerator object

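datagen = ImageDataGenerator(
    rotation_range=40,        # random rotation of up to 40 degrees (allowed: 0 to 180)
    width_shift_range=0.2,    # horizontal translation, as a fraction of the width
    height_shift_range=0.2,   # vertical translation, as a fraction of the height
    zoom_range=0.2,           # random zoom
    horizontal_flip=True,     # randomly flip images left to right
    fill_mode='nearest')      # strategy for filling in newly created pixels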
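To give a feel for two of these transformations, here is a simplified NumPy sketch of a horizontal flip and a horizontal shift that fills the gap by repeating the nearest edge pixel. It is an illustration only: `flip_horizontal` and `shift_width_nearest` are hypothetical helpers, the shift is a whole number of pixels, and the tiny one-row "image" is made up. Keras draws the amounts at random and also handles fractional shifts, rotation and zoom.

```python
import numpy as np

def flip_horizontal(image):
    """Mirror an (H, W, C) image left to right."""
    return image[:, ::-1, :]

def shift_width_nearest(image, dx):
    """Shift an (H, W, C) image dx pixels to the right, repeating edge pixels."""
    width = image.shape[1]
    # Output column j reads input column j - dx, clamped to the valid range.
    source_cols = np.clip(np.arange(width) - dx, 0, width - 1)
    return image[:, source_cols, :]

# A made-up 1x4 single-channel image whose pixel values are 0, 1, 2, 3.
image = np.arange(4).reshape(1, 4, 1)
flipped = flip_horizontal(image)
shifted = shift_width_nearest(image, 1)
print(flipped[0, :, 0], shifted[0, :, 0])
```

With `width_shift_range=0.2` on a 32-pixel-wide image, the shift can be up to 0.2 * 32 = 6.4 pixels in either direction.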

Step 8: Train the model

model = MyCNN.build(input_shape=INPUT_SHAPE, classes=NB_CLASSES)

model.compile(loss='categorical_crossentropy', optimizer=OPTIM,
              metrics=['accuracy'])

# Keras 2 counts batches per epoch (steps_per_epoch) instead of the
# deprecated Keras 1 samples_per_epoch; 50000 // 128 = 390 steps.
history = model.fit_generator(
    datagen.flow(X_train, Y_train, batch_size=BATCH_SIZE),
    steps_per_epoch=X_train.shape[0] // BATCH_SIZE,
    epochs=NB_EPOCH, verbose=VERBOSE)

# Alternative without augmentation:
# history = model.fit(X_train, Y_train, batch_size=BATCH_SIZE,
#                     epochs=NB_EPOCH, validation_split=VALIDATION_SPLIT,
#                     verbose=VERBOSE)

_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
conv2d_1 (Conv2D)            (None, 32, 32, 32)        896
_________________________________________________________________
activation_1 (Activation)    (None, 32, 32, 32)        0
_________________________________________________________________
conv2d_2 (Conv2D)            (None, 32, 32, 32)        9248
_________________________________________________________________
activation_2 (Activation)    (None, 32, 32, 32)        0
_________________________________________________________________
max_pooling2d_1 (MaxPooling2 (None, 16, 16, 32)        0
_________________________________________________________________
dropout_1 (Dropout)          (None, 16, 16, 32)        0
_________________________________________________________________
conv2d_3 (Conv2D)            (None, 16, 16, 64)        18496
_________________________________________________________________
activation_3 (Activation)    (None, 16, 16, 64)        0
_________________________________________________________________
conv2d_4 (Conv2D)            (None, 14, 14, 64)        36928
_________________________________________________________________
activation_4 (Activation)    (None, 14, 14, 64)        0
_________________________________________________________________
max_pooling2d_2 (MaxPooling2 (None, 7, 7, 64)          0
_________________________________________________________________
dropout_2 (Dropout)          (None, 7, 7, 64)          0
_________________________________________________________________
flatten_1 (Flatten)          (None, 3136)              0
_________________________________________________________________
dense_1 (Dense)              (None, 512)               1606144
_________________________________________________________________
activation_5 (Activation)    (None, 512)               0
_________________________________________________________________
dropout_3 (Dropout)          (None, 512)               0
_________________________________________________________________
dense_2 (Dense)              (None, 10)                5130
_________________________________________________________________
activation_6 (Activation)    (None, 10)                0
=================================================================
Total params: 1,676,842
Trainable params: 1,676,842
Non-trainable params: 0
_________________________________________________________________


Epoch 1/40

390/390 [==============================] - 20s - loss: 1.9263 - acc: 0.2967

Epoch 2/40

390/390 [==============================] - 18s - loss: 1.6401 - acc: 0.4045

Epoch 3/40

390/390 [==============================] - 18s - loss: 1.5116 - acc: 0.4560

Epoch 4/40

390/390 [==============================] - 18s - loss: 1.4326 - acc: 0.4857

Epoch 5/40

390/390 [==============================] - 18s - loss: 1.3798 - acc: 0.5062

Epoch 6/40

390/390 [==============================] - 18s - loss: 1.3362 - acc: 0.5224

Epoch 7/40

390/390 [==============================] - 18s - loss: 1.3083 - acc: 0.5337

Epoch 8/40

390/390 [==============================] - 18s - loss: 1.2809 - acc: 0.5448

Epoch 9/40

390/390 [==============================] - 18s - loss: 1.2645 - acc: 0.5528

Epoch 10/40

390/390 [==============================] - 18s - loss: 1.2382 - acc: 0.5611

Epoch 11/40

390/390 [==============================] - 18s - loss: 1.2294 - acc: 0.5666

Epoch 12/40

390/390 [==============================] - 18s - loss: 1.2044 - acc: 0.5746

Epoch 13/40

390/390 [==============================] - 18s - loss: 1.2055 - acc: 0.5782

Epoch 14/40

390/390 [==============================] - 18s - loss: 1.1971 - acc: 0.5817

Epoch 15/40

390/390 [==============================] - 18s - loss: 1.1826 - acc: 0.5878

Epoch 16/40

390/390 [==============================] - 18s - loss: 1.1897 - acc: 0.5834

Epoch 17/40

390/390 [==============================] - 18s - loss: 1.1859 - acc: 0.5869

Epoch 18/40

390/390 [==============================] - 19s - loss: 1.1820 - acc: 0.5907

Epoch 19/40

390/390 [==============================] - 21s - loss: 1.1765 - acc: 0.5912

Epoch 20/40

390/390 [==============================] - 19s - loss: 1.1722 - acc: 0.5939

Epoch 21/40

390/390 [==============================] - 19s - loss: 1.1739 - acc: 0.5939

Epoch 22/40

390/390 [==============================] - 18s - loss: 1.1703 - acc: 0.5971

Epoch 23/40

390/390 [==============================] - 18s - loss: 1.1745 - acc: 0.5946

Epoch 24/40

390/390 [==============================] - 18s - loss: 1.1741 - acc: 0.5926

Epoch 25/40

390/390 [==============================] - 19s - loss: 1.1832 - acc: 0.5934

Epoch 26/40

390/390 [==============================] - 115s - loss: 1.1748 - acc: 0.5942

Epoch 27/40

390/390 [==============================] - 136s - loss: 1.1777 - acc: 0.5935

Epoch 28/40

390/390 [==============================] - 136s - loss: 1.1800 - acc: 0.5936

Epoch 29/40

390/390 [==============================] - 136s - loss: 1.1808 - acc: 0.5955

Epoch 30/40

390/390 [==============================] - 136s - loss: 1.1860 - acc: 0.5924

Epoch 31/40

390/390 [==============================] - 136s - loss: 1.1840 - acc: 0.5960

Epoch 32/40

390/390 [==============================] - 137s - loss: 1.1949 - acc: 0.5903

Epoch 33/40

390/390 [==============================] - 137s - loss: 1.1926 - acc: 0.5893

Epoch 34/40

390/390 [==============================] - 142s - loss: 1.1997 - acc: 0.5900

Epoch 35/40

390/390 [==============================] - 140s - loss: 1.1970 - acc: 0.5910

Epoch 36/40

390/390 [==============================] - 139s - loss: 1.2093 - acc: 0.5875

Epoch 37/40

390/390 [==============================] - 140s - loss: 1.2162 - acc: 0.5863

Epoch 38/40

390/390 [==============================] - 140s - loss: 1.2040 - acc: 0.5890

Epoch 39/40

390/390 [==============================] - 136s - loss: 1.2210 - acc: 0.5830

Epoch 40/40

390/390 [==============================] - 138s - loss: 1.2204 - acc: 0.5847
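The `390/390` counter in each epoch line is the number of batches drawn from the generator per epoch, not the number of images. A small sketch of the arithmetic, using the training set size and batch size from this post:

```python
import math

train_samples = 50000  # X_train.shape[0]
batch_size = 128       # BATCH_SIZE

# Full batches per epoch when using integer division.
steps_per_epoch = train_samples // batch_size
# Samples not covered by those full batches.
leftover = train_samples - steps_per_epoch * batch_size
# Number of steps needed to cover every sample, including a final partial batch.
steps_to_cover_all = math.ceil(train_samples / batch_size)

print(steps_per_epoch, leftover, steps_to_cover_all)
```

So 390 full batches cover 49,920 of the 50,000 training images in each epoch.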

Step 9: Test the model

score = model.evaluate(X_test, Y_test,
                       batch_size=BATCH_SIZE, verbose=VERBOSE)

print("\nTest score:", score[0])

print('Test accuracy:', score[1])

10000/10000 [==============================] - 5s

Test score: 1.06622838154

Test accuracy: 0.6374
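Here `score[0]` is the categorical cross-entropy loss and `score[1]` is the accuracy. As a sketch of how those two quantities are defined, the NumPy functions below compute them on two made-up samples with three classes. They are simplified stand-ins written for this example, not the Keras code, and the clipping constant `eps` is an assumption.

```python
import numpy as np

def categorical_accuracy(y_true_one_hot, y_prob):
    """Fraction of rows where the most probable class equals the true class."""
    return float(np.mean(y_true_one_hot.argmax(axis=1) == y_prob.argmax(axis=1)))

def categorical_crossentropy(y_true_one_hot, y_prob, eps=1e-7):
    """Mean negative log-probability assigned to the true class."""
    y_prob = np.clip(y_prob, eps, 1.0)  # avoid log(0)
    return float(np.mean(-np.sum(y_true_one_hot * np.log(y_prob), axis=1)))

# Two made-up samples: the first is classified correctly, the second is not.
y_true = np.array([[1, 0, 0], [0, 1, 0]], dtype="float32")
y_prob = np.array([[0.7, 0.2, 0.1], [0.6, 0.3, 0.1]])

demo_accuracy = categorical_accuracy(y_true, y_prob)
demo_loss = categorical_crossentropy(y_true, y_prob)
print(demo_accuracy, demo_loss)
```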

Step 10: Save the model

model_json = model.to_json()

with open('cifar10_architecture.json', 'w') as f:
    f.write(model_json)

model.save_weights('cifar10_weights.h5', overwrite=True)

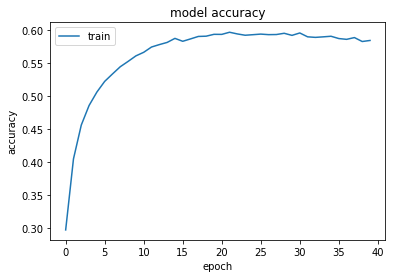

Step 11: Plot the accuracy

print(history.history.keys())

plt.plot(history.history['acc'])

# plt.plot(history.history['val_acc'])

plt.title('model accuracy')

plt.ylabel('accuracy')

plt.xlabel('epoch')

plt.legend(['train'], loc='upper left')

plt.show()

dict_keys(['acc', 'loss'])

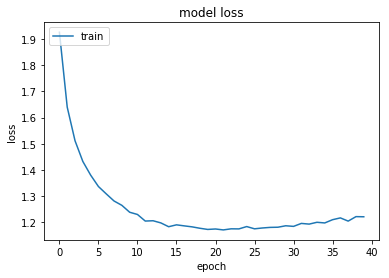

Step 12: Plot loss history

plt.plot(history.history['loss'])

#plt.plot(history.history['val_loss'])

plt.title('model loss')

plt.ylabel('loss')

plt.xlabel('epoch')

plt.legend(['train'], loc='upper left')

plt.show()

Step 13: Test on random images

import numpy as np

disp_images = []

# Pick 10 random test images and print the model's prediction for each.
for i in np.random.choice(np.arange(0, len(Y_test)), size=(10, )):
    probs = model.predict(X_test[np.newaxis, i])
    print('Probability: %s' % probs)
    prediction = probs.argmax(axis=1)
    # Prints the probability assigned to the predicted class.
    print('Prediction: %s' % probs[0][prediction])
    # Undo the [0, 1] scaling so the image can be displayed.
    image = (X_test[i] * 255).astype("uint8")
    disp_images.append(image)
    print("[INFO] Predicted: {}, Actual: {}".format(prediction[0], np.argmax(Y_test[i])))
    print('\n')

# Show the 10 sampled images side by side with the axes hidden.
fig = plt.figure(figsize=(20, 50))
for k in range(10):
    plt.subplot(1, 10, k+1)
    plt.gca().axes.get_yaxis().set_visible(False)
    plt.gca().axes.get_xaxis().set_visible(False)
    plt.imshow(disp_images[k])

Probability: [[ 7.19055301e-04 1.20621669e-04 3.07680629e-02 3.85810345e-01

8.80943611e-02 3.63511145e-01 1.14577323e-01 1.00582978e-02

5.96198253e-03 3.78655794e-04]]

Prediction: [ 0.38581035]

[INFO] Predicted: 3, Actual: 8

Probability: [[ 3.54413316e-03 1.01541495e-03 1.33760483e-03 2.29969062e-03

4.13530594e-04 1.13673590e-03 3.10226751e-04 2.86917388e-03

9.71093893e-01 1.59796029e-02]]

Prediction: [ 0.97109389]

[INFO] Predicted: 8, Actual: 8

Probability: [[ 1.01875899e-04 9.96330891e-06 5.78770578e-01 4.01648320e-02

3.48510444e-02 6.18964657e-02 2.55936652e-01 2.81523224e-02

4.77184112e-05 6.86029307e-05]]

Prediction: [ 0.57877058]

[INFO] Predicted: 2, Actual: 2

Probability: [[ 1.50341517e-10 2.65657049e-12 7.01352803e-07 6.25328114e-03

3.12692912e-08 9.93741572e-01 1.61011426e-09 4.39857695e-06

1.84451190e-10 8.35026326e-10]]

Prediction: [ 0.99374157]

[INFO] Predicted: 5, Actual: 5

Probability: [[ 1.26958417e-04 1.64489775e-05 5.77923085e-04 1.66505590e-01

1.35281167e-04 8.30997884e-01 9.93422815e-04 5.65124734e-04

2.37338318e-05 5.75101221e-05]]

Prediction: [ 0.83099788]

[INFO] Predicted: 5, Actual: 5

Probability: [[ 7.95807937e-05 2.71133278e-02 1.41070245e-07 3.07860432e-06

1.12356258e-08 6.93813547e-07 7.61351316e-07 6.59241960e-06

6.78489596e-05 9.72728014e-01]]

Prediction: [ 0.97272801]

[INFO] Predicted: 9, Actual: 9

Probability: [[ 0.45835289 0.0992886 0.05374259 0.00768392 0.00967235 0.01698391

0.01483182 0.0547625 0.02386722 0.26081428]]

Prediction: [ 0.45835289]

[INFO] Predicted: 0, Actual: 1

Probability: [[ 0.01203726 0.03811758 0.56271601 0.06542783 0.04211888 0.06809958

0.0810151 0.07775066 0.01009418 0.04262282]]

Prediction: [ 0.56271601]

[INFO] Predicted: 2, Actual: 2

Probability: [[ 9.01278341e-08 4.38804122e-08 5.33129601e-03 3.90676595e-03

8.53685662e-03 1.37622282e-03 9.80831802e-01 1.58587718e-05

9.04132662e-07 1.28818186e-07]]

Prediction: [ 0.9808318]

[INFO] Predicted: 6, Actual: 6

Probability: [[ 1.97944191e-05 6.17762344e-05 4.49966080e-03 2.19393641e-01

1.55775447e-03 7.14188814e-01 3.18020029e-04 5.76043911e-02

7.38053350e-04 1.61815935e-03]]

Prediction: [ 0.71418881]

[INFO] Predicted: 5, Actual: 5
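The class indices printed above follow the standard CIFAR-10 label order, from 0 (airplane) to 9 (truck). The sketch below turns indices into names and also reports the runner-up class, using the first probability vector from the output above. `CLASS_NAMES` and `top_k` are names introduced here for illustration.

```python
# Standard CIFAR-10 label order, index 0 to 9.
CLASS_NAMES = ["airplane", "automobile", "bird", "cat", "deer",
               "dog", "frog", "horse", "ship", "truck"]

def top_k(probs, k=2):
    """Return the k most probable (class name, probability) pairs."""
    ranked = sorted(range(len(probs)), key=lambda idx: probs[idx], reverse=True)
    return [(CLASS_NAMES[idx], probs[idx]) for idx in ranked[:k]]

# First probability vector from the output above (predicted 3, actual 8).
first_probs = [7.19055301e-04, 1.20621669e-04, 3.07680629e-02, 3.85810345e-01,
               8.80943611e-02, 3.63511145e-01, 1.14577323e-01, 1.00582978e-02,
               5.96198253e-03, 3.78655794e-04]

best_two = top_k(first_probs)
print(best_two)
print("actual:", CLASS_NAMES[8])
```

For that image the model was almost evenly split between cat and dog, while the true label was ship.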

-mkc

Written on September 30, 2017