CIFAR-10 Demo V4

Using Keras’ Data Augmentation

Step 1: Organize Imports

from keras import backend as K

from keras.datasets import cifar10

from keras.utils import np_utils

from keras.models import Sequential

from keras.layers.core import Dense, Dropout, Activation, Flatten

from keras.layers.convolutional import Conv2D, MaxPooling2D

from keras.optimizers import SGD, Adam, RMSprop

from keras.preprocessing.image import ImageDataGenerator

import matplotlib.pyplot as plt

%matplotlib inline

Using TensorFlow backend.

Step 2: Set the constants

CIFAR-10 is a set of 60,000 color images (50,000 for training and 10,000 for testing), each 32x32 pixels with 3 channels.

IMG_CHANNELS = 3

IMG_ROWS = 32

IMG_COLS = 32

INPUT_SHAPE = (IMG_ROWS, IMG_COLS, IMG_CHANNELS) # channels last

BATCH_SIZE = 128

NB_EPOCH = 60

NB_CLASSES = 10

VERBOSE = 1

VALIDATION_SPLIT = 0.2

OPTIM = Adam()
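VALIDATION_SPLIT only matters for the commented-out `model.fit` call in Step 8; the generator-based training used in this demo never reads it. As a rough sketch of what a 0.2 split would mean for the 50,000 training images loaded in Step 3, assuming the held-out samples are taken from the end of the arrays (which is how Keras documents `validation_split`). The helper name `split_index` is made up for this illustration.

```python
def split_index(n_samples, validation_split):
    """Index where the validation slice would start (hypothetical helper)."""
    return int(n_samples * (1.0 - validation_split))

n_train = 50000  # CIFAR-10 training set size
split_at = split_index(n_train, 0.2)

# Samples before split_at would be trained on, the rest held out.
print(split_at, n_train - split_at)
```

With these numbers, 40,000 images would be used for training and 10,000 for validation.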

Step 3: Load dataset

(X_train, y_train), (X_test, y_test) = cifar10.load_data()

print('X_train shape:', X_train.shape)

print(X_train.shape[0], 'train samples')

print(X_test.shape[0], 'test samples')

X_train shape: (50000, 32, 32, 3)

50000 train samples

10000 test samples

Step 4: Convert labels to categorical (one-hot encoding)

Y_train = np_utils.to_categorical(y_train, NB_CLASSES)

Y_test = np_utils.to_categorical(y_test, NB_CLASSES)
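To make the encoding concrete, here is a minimal plain-Python sketch of what one-hot encoding does to a single integer label. It is not how `to_categorical` is implemented; the function name `to_one_hot` and the sample labels are assumptions for illustration only.

```python
def to_one_hot(label, num_classes):
    """Return a list with 1.0 at position `label` and 0.0 elsewhere."""
    row = [0.0] * num_classes
    row[label] = 1.0
    return row

labels = [3, 0, 9]  # made-up class indices
encoded = [to_one_hot(y, 10) for y in labels]

for y, row in zip(labels, encoded):
    print(y, row)
```

Each label becomes a vector of length NB_CLASSES, which is the target shape that `categorical_crossentropy` expects.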

Step 5: Convert to float and normalize

X_train = X_train.astype('float32')

X_test = X_test.astype('float32')

X_train /= 255

X_test /= 255
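The cast to float32 comes first because an integer array cannot hold the fractional results of the division. A small plain-Python sketch of the scaling itself, using made-up pixel values (the helper `scale_pixels` is hypothetical):

```python
def scale_pixels(pixels, max_value=255.0):
    """Map 8-bit intensities to floats in the range [0, 1]."""
    return [p / max_value for p in pixels]

raw = [0, 51, 255]  # made-up 8-bit intensities
scaled = scale_pixels(raw)
print(scaled)
```

Keeping inputs in a small, fixed range like this generally makes gradient-based training better behaved than feeding raw 0 to 255 values.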

Step 6: Define the ConvNet model v2

class MyCNN:

    @staticmethod

    def build(input_shape, classes):

        model = Sequential()

        model.add(Conv2D(32, (3, 3), padding='same', input_shape=input_shape))

        model.add(Activation('relu'))

        model.add(Conv2D(32, (3, 3), padding='same'))

        model.add(Activation('relu'))

        model.add(MaxPooling2D(pool_size=(2, 2)))

        model.add(Dropout(0.25))

        model.add(Conv2D(64, (3, 3), padding='same'))

        model.add(Activation('relu'))

        model.add(Conv2D(64, (3, 3)))

        model.add(Activation('relu'))

        model.add(MaxPooling2D(pool_size=(2, 2)))

        model.add(Dropout(0.25))

        model.add(Flatten())

        model.add(Dense(512))

        model.add(Activation('relu'))

        model.add(Dropout(0.5))

        model.add(Dense(classes))

        model.add(Activation('softmax'))

        model.summary()

        return model
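The layer sizes and parameter counts that `model.summary()` prints in Step 8 can be checked by hand. The sketch below redoes that arithmetic in plain Python, assuming stride-1 convolutions, 2x2 pooling, and one bias per filter or unit; the helper names are made up for this illustration.

```python
def conv2d_params(kernel_h, kernel_w, in_channels, filters):
    """Weights plus one bias per filter."""
    return (kernel_h * kernel_w * in_channels + 1) * filters

def dense_params(inputs, units):
    """Weights plus one bias per unit."""
    return (inputs + 1) * units

def conv_out(size, kernel, padding):
    """Spatial size after a stride-1 convolution."""
    return size if padding == 'same' else size - kernel + 1

size = 32
size = conv_out(size, 3, 'same')   # 32
size = conv_out(size, 3, 'same')   # 32
size //= 2                         # 2x2 max pooling: 16
size = conv_out(size, 3, 'same')   # 16
size = conv_out(size, 3, 'valid')  # 14, this layer has no padding argument
size //= 2                         # 2x2 max pooling: 7
flat = size * size * 64            # 3136 values after Flatten

counts = [
    conv2d_params(3, 3, 3, 32),    # first Conv2D
    conv2d_params(3, 3, 32, 32),   # second Conv2D
    conv2d_params(3, 3, 32, 64),   # third Conv2D
    conv2d_params(3, 3, 64, 64),   # fourth Conv2D
    dense_params(flat, 512),       # Dense(512)
    dense_params(512, 10),         # Dense(classes)
]
total = sum(counts)
print(counts, total)
```

The total comes to 1,676,842 parameters, and nearly all of them (1,606,144) sit in the first dense layer rather than in the convolutions.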

Step 7: Define the ImageDataGenerator object

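datagen = ImageDataGenerator(

    rotation_range=20,  # random rotations of up to 20 degrees (allowed range: 0 to 180)

    width_shift_range=0.2,  # random horizontal shift, as a fraction of image width

    height_shift_range=0.2,  # random vertical shift, as a fraction of image height

    zoom_range=0.1,  # random zoom of up to 10%

    horizontal_flip=True,  # randomly mirror images left to right

    fill_mode='nearest')  # fill newly exposed pixels with the nearest edge value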
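To give a feel for two of these options, here is a toy sketch of a horizontal flip and of a shift whose gap is filled with the nearest edge pixel. On a 32-pixel-wide image, `width_shift_range=0.2` allows shifts of up to roughly 6 pixels. The functions below are illustrative stand-ins on plain lists, not Keras' implementation, and the tiny image is made up.

```python
def horizontal_flip(image):
    """Mirror each row of a 2-D image given as a list of rows."""
    return [row[::-1] for row in image]

def shift_right_nearest(row, shift):
    """Shift a row right by `shift` pixels, repeating the left edge pixel
    to fill the gap (the idea behind fill_mode='nearest')."""
    shift = max(0, min(shift, len(row)))
    return [row[0]] * shift + row[:len(row) - shift]

image = [[1, 2, 3, 4],
         [5, 6, 7, 8]]  # made-up 2x4 grayscale image

print(horizontal_flip(image))
print(shift_right_nearest(image[0], 1))
```

Because the generator draws new random transforms for every batch, the network rarely sees exactly the same image twice, which is the point of augmentation.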

Step 8: Train the model

model = MyCNN.build(input_shape=INPUT_SHAPE, classes=NB_CLASSES)

model.compile(loss='categorical_crossentropy', optimizer=OPTIM, metrics=['accuracy'])

history = model.fit_generator(

    datagen.flow(X_train, Y_train, batch_size=BATCH_SIZE),

    samples_per_epoch=X_train.shape[0],

    epochs=NB_EPOCH, verbose=VERBOSE)

# history = model.fit(X_train, Y_train, batch_size=BATCH_SIZE,

# epochs=NB_EPOCH, validation_split=VALIDATION_SPLIT,

# verbose=VERBOSE)

_________________________________________________________________

Layer (type)                 Output Shape              Param #

=================================================================

conv2d_13 (Conv2D)           (None, 32, 32, 32)        896

_________________________________________________________________

activation_19 (Activation)   (None, 32, 32, 32)        0

_________________________________________________________________

conv2d_14 (Conv2D)           (None, 32, 32, 32)        9248

_________________________________________________________________

activation_20 (Activation)   (None, 32, 32, 32)        0

_________________________________________________________________

max_pooling2d_7 (MaxPooling2 (None, 16, 16, 32)        0

_________________________________________________________________

dropout_10 (Dropout)         (None, 16, 16, 32)        0

_________________________________________________________________

conv2d_15 (Conv2D)           (None, 16, 16, 64)        18496

_________________________________________________________________

activation_21 (Activation)   (None, 16, 16, 64)        0

_________________________________________________________________

conv2d_16 (Conv2D)           (None, 14, 14, 64)        36928

_________________________________________________________________

activation_22 (Activation)   (None, 14, 14, 64)        0

_________________________________________________________________

max_pooling2d_8 (MaxPooling2 (None, 7, 7, 64)          0

_________________________________________________________________

dropout_11 (Dropout)         (None, 7, 7, 64)          0

_________________________________________________________________

flatten_4 (Flatten)          (None, 3136)              0

_________________________________________________________________

dense_7 (Dense)              (None, 512)               1606144

_________________________________________________________________

activation_23 (Activation)   (None, 512)               0

_________________________________________________________________

dropout_12 (Dropout)         (None, 512)               0

_________________________________________________________________

dense_8 (Dense)              (None, 10)                5130

_________________________________________________________________

activation_24 (Activation)   (None, 10)                0

=================================================================

Total params: 1,676,842

Trainable params: 1,676,842

Non-trainable params: 0

_________________________________________________________________

C:\Program Files\Anaconda3\envs\tensorflow-gpu\lib\site-packages\ipykernel_launcher.py:6: UserWarning: Update your `fit_generator` call to the Keras 2 API: `fit_generator(<keras.pre..., verbose=1, epochs=60, steps_per_epoch=390)`

Epoch 1/60

390/390 [==============================] - 19s - loss: 1.7950 - acc: 0.3352

Epoch 2/60

390/390 [==============================] - 18s - loss: 1.4720 - acc: 0.4621

Epoch 3/60

390/390 [==============================] - 18s - loss: 1.3347 - acc: 0.5174

Epoch 4/60

390/390 [==============================] - 18s - loss: 1.2582 - acc: 0.5486

Epoch 5/60

390/390 [==============================] - 18s - loss: 1.2003 - acc: 0.5687

Epoch 6/60

390/390 [==============================] - 18s - loss: 1.1632 - acc: 0.5835

Epoch 7/60

390/390 [==============================] - 18s - loss: 1.1251 - acc: 0.5986

Epoch 8/60

390/390 [==============================] - 18s - loss: 1.0898 - acc: 0.6100

Epoch 9/60

390/390 [==============================] - 18s - loss: 1.0718 - acc: 0.6197

Epoch 10/60

390/390 [==============================] - 18s - loss: 1.0470 - acc: 0.6243

Epoch 11/60

390/390 [==============================] - 18s - loss: 1.0249 - acc: 0.6359

Epoch 12/60

390/390 [==============================] - 18s - loss: 1.0060 - acc: 0.6451

Epoch 13/60

390/390 [==============================] - 18s - loss: 0.9817 - acc: 0.6541

Epoch 14/60

390/390 [==============================] - 18s - loss: 0.9782 - acc: 0.6563

Epoch 15/60

390/390 [==============================] - 18s - loss: 0.9651 - acc: 0.6631

Epoch 16/60

390/390 [==============================] - 18s - loss: 0.9511 - acc: 0.6642

Epoch 17/60

390/390 [==============================] - 18s - loss: 0.9533 - acc: 0.6646

Epoch 18/60

390/390 [==============================] - 18s - loss: 0.9387 - acc: 0.6717

Epoch 19/60

390/390 [==============================] - 18s - loss: 0.9345 - acc: 0.6723

Epoch 20/60

390/390 [==============================] - 19s - loss: 0.9145 - acc: 0.6800

Epoch 21/60

390/390 [==============================] - 18s - loss: 0.9095 - acc: 0.6810

Epoch 22/60

390/390 [==============================] - 18s - loss: 0.9140 - acc: 0.6780

Epoch 23/60

390/390 [==============================] - 19s - loss: 0.9036 - acc: 0.6834

Epoch 24/60

390/390 [==============================] - 19s - loss: 0.8934 - acc: 0.6869

Epoch 25/60

390/390 [==============================] - 18s - loss: 0.8955 - acc: 0.6862

Epoch 26/60

390/390 [==============================] - 19s - loss: 0.8769 - acc: 0.6911

Epoch 27/60

390/390 [==============================] - 18s - loss: 0.8745 - acc: 0.6928

Epoch 28/60

390/390 [==============================] - 18s - loss: 0.8702 - acc: 0.6973

Epoch 29/60

390/390 [==============================] - 18s - loss: 0.8774 - acc: 0.6954

Epoch 30/60

390/390 [==============================] - 18s - loss: 0.8674 - acc: 0.6961

Epoch 31/60

390/390 [==============================] - 18s - loss: 0.8589 - acc: 0.7001

Epoch 32/60

390/390 [==============================] - 19s - loss: 0.8506 - acc: 0.6992

Epoch 33/60

390/390 [==============================] - 19s - loss: 0.8472 - acc: 0.7034

Epoch 34/60

390/390 [==============================] - 18s - loss: 0.8422 - acc: 0.7041

Epoch 35/60

390/390 [==============================] - 18s - loss: 0.8381 - acc: 0.7064

Epoch 36/60

390/390 [==============================] - 19s - loss: 0.8430 - acc: 0.7052

Epoch 37/60

390/390 [==============================] - 18s - loss: 0.8354 - acc: 0.7082

Epoch 38/60

390/390 [==============================] - 19s - loss: 0.8321 - acc: 0.7092

Epoch 39/60

390/390 [==============================] - 19s - loss: 0.8345 - acc: 0.7092

Epoch 40/60

390/390 [==============================] - 19s - loss: 0.8299 - acc: 0.7085

Epoch 41/60

390/390 [==============================] - 18s - loss: 0.8198 - acc: 0.7140

Epoch 42/60

390/390 [==============================] - 18s - loss: 0.8214 - acc: 0.7115

Epoch 43/60

390/390 [==============================] - 18s - loss: 0.8088 - acc: 0.7168

Epoch 44/60

390/390 [==============================] - 18s - loss: 0.8144 - acc: 0.7162

Epoch 45/60

390/390 [==============================] - 20s - loss: 0.8146 - acc: 0.7144

Epoch 46/60

390/390 [==============================] - 100s - loss: 0.8044 - acc: 0.7190

Epoch 47/60

390/390 [==============================] - 118s - loss: 0.8186 - acc: 0.7146

Epoch 48/60

390/390 [==============================] - 118s - loss: 0.8031 - acc: 0.7234

Epoch 49/60

390/390 [==============================] - 118s - loss: 0.8029 - acc: 0.7196

Epoch 50/60

390/390 [==============================] - 42s - loss: 0.8067 - acc: 0.7207

Epoch 51/60

390/390 [==============================] - 18s - loss: 0.8032 - acc: 0.7195

Epoch 52/60

390/390 [==============================] - 18s - loss: 0.7993 - acc: 0.7196

Epoch 53/60

390/390 [==============================] - 18s - loss: 0.7920 - acc: 0.7235

Epoch 54/60

390/390 [==============================] - 18s - loss: 0.7937 - acc: 0.7236

Epoch 55/60

390/390 [==============================] - 18s - loss: 0.7986 - acc: 0.7225

Epoch 56/60

390/390 [==============================] - 19s - loss: 0.7938 - acc: 0.7242

Epoch 57/60

390/390 [==============================] - 19s - loss: 0.7904 - acc: 0.7248

Epoch 58/60

390/390 [==============================] - 19s - loss: 0.7819 - acc: 0.7250

Epoch 59/60

390/390 [==============================] - 18s - loss: 0.7849 - acc: 0.7285

Epoch 60/60

390/390 [==============================] - 19s - loss: 0.7877 - acc: 0.7258
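The UserWarning printed before the first epoch suggests `steps_per_epoch=390`, and the progress bars show 390 steps per epoch. In the Keras 2 API this argument counts batches rather than samples. The sketch below redoes the arithmetic with the values from Steps 2 and 3; the variable names are made up for this illustration.

```python
import math

n_train = 50000   # training samples reported in Step 3
batch_size = 128  # BATCH_SIZE from Step 2

full_batches = n_train // batch_size            # complete batches only
all_batches = math.ceil(n_train / batch_size)   # includes a final partial batch
leftover = n_train - full_batches * batch_size  # samples beyond the full batches

print(full_batches, all_batches, leftover)
```

Floor division gives the 390 steps seen in the log and leaves 80 samples over; rounding up to 391 steps would be one way to cover every sample once per epoch.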

Step 9: Test the model

score = model.evaluate(X_test, Y_test,

                       batch_size=BATCH_SIZE, verbose=VERBOSE)

print("\nTest score:", score[0])

print('Test accuracy:', score[1])

9984/10000 [============================>.] - ETA: 0s

Test score: 0.563428911638

Test accuracy: 0.8035
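Here `score[0]` is the loss the model was compiled with (categorical cross-entropy) averaged over the test set, and `score[1]` is the accuracy. As a minimal sketch of the per-sample loss, assuming a one-hot target and a small `eps` guard against `log(0)`; the function and the example probabilities are made up for illustration:

```python
import math

def categorical_crossentropy(one_hot, probs, eps=1e-7):
    """Loss for one sample: negative log of the probability given to the true class."""
    return -sum(t * math.log(max(p, eps)) for t, p in zip(one_hot, probs))

# The true class is index 1 in both cases.
confident = categorical_crossentropy([0, 1, 0], [0.05, 0.90, 0.05])
unsure = categorical_crossentropy([0, 1, 0], [0.30, 0.40, 0.30])

print(confident, unsure)  # lower is better
```

A confident correct prediction yields a loss near zero, while a hesitant one is penalized more, so the loss can keep improving even when accuracy does not change.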

Step 10: Save the model

model_json = model.to_json()

with open('cifar10_architecture.json', 'w') as f:

    f.write(model_json)

model.save_weights('cifar10_weights.h5', overwrite=True)

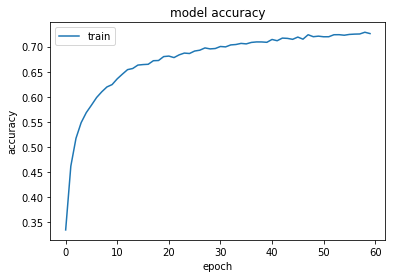

Step 11: Plot the accuracy

print(history.history.keys())

plt.plot(history.history['acc'])

# plt.plot(history.history['val_acc'])

plt.title('model accuracy')

plt.ylabel('accuracy')

plt.xlabel('epoch')

plt.legend(['train'], loc='upper left')

plt.show()

dict_keys(['acc', 'loss'])

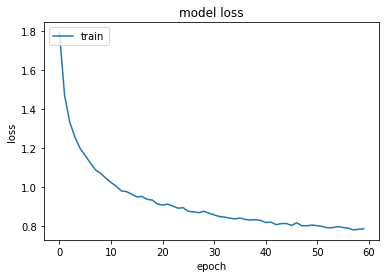

Step 12: Plot loss history

plt.plot(history.history['loss'])

#plt.plot(history.history['val_loss'])

plt.title('model loss')

plt.ylabel('loss')

plt.xlabel('epoch')

plt.legend(['train'], loc='upper left')

plt.show()

Step 13: Test on random images

import numpy as np

disp_images = []

# Pick 10 random test images and classify each one

for i in np.random.choice(np.arange(0, len(Y_test)), size=(10, )):

    probs = model.predict(X_test[np.newaxis, i])

    print('Probability: %s' % probs)

    prediction = probs.argmax(axis=1)

    print('Prediction: %s' % probs[0][prediction])

    # Undo the [0, 1] scaling so the image can be displayed

    image = (X_test[i] * 255).astype("uint8")

    disp_images.append(image)

    print("[INFO] Predicted: {}, Actual: {}".format(prediction[0], np.argmax(Y_test[i])))

    print('\n')

# Show the 10 sampled images side by side

fig = plt.figure(figsize=(20, 50))

for k in range(10):

    plt.subplot(1, 10, k+1)

    plt.gca().axes.get_yaxis().set_visible(False)

    plt.gca().axes.get_xaxis().set_visible(False)

    plt.imshow(disp_images[k])

Probability: [[ 1.62547285e-11 9.99998927e-01 6.70743241e-15 8.62841767e-14

1.63081470e-18 4.42548298e-16 5.23440781e-14 8.07765559e-17

8.47502537e-12 1.09237351e-06]]

Prediction: [ 0.99999893]

[INFO] Predicted: 1, Actual: 1

Probability: [[ 0.01599916 0.16678077 0.00981532 0.00189127 0.00097248 0.00574225

0.00214783 0.00203614 0.77152532 0.02308941]]

Prediction: [ 0.77152532]

[INFO] Predicted: 8, Actual: 8

Probability: [[ 5.51527459e-03 9.31061804e-01 1.63177028e-03 1.02918840e-03

2.74870345e-05 6.37103745e-04 1.13927340e-03 3.45696550e-04

4.19025756e-02 1.67095754e-02]]

Prediction: [ 0.9310618]

[INFO] Predicted: 1, Actual: 1

Probability: [[ 2.74059270e-03 6.53386891e-01 3.70431371e-05 1.17003940e-04

2.07870562e-06 1.24069447e-06 8.39660061e-05 1.34359232e-06

3.10208261e-01 3.34216133e-02]]

Prediction: [ 0.65338689]

[INFO] Predicted: 1, Actual: 8

Probability: [[ 0.12598664 0.00326033 0.00601068 0.01605523 0.01369602 0.00445945

0.00070886 0.01564486 0.12098835 0.69318962]]

Prediction: [ 0.69318962]

[INFO] Predicted: 9, Actual: 9

Probability: [[ 1.39591630e-05 9.49036405e-09 3.98821523e-03 6.68474913e-01

1.17446929e-04 3.05832131e-03 3.24338466e-01 7.68542577e-06

9.44786734e-07 8.33802787e-08]]

Prediction: [ 0.66847491]

[INFO] Predicted: 3, Actual: 3

Probability: [[ 6.13338547e-04 5.20564172e-05 3.06526315e-03 4.07787971e-03

1.46034057e-03 9.47108306e-03 3.58816091e-04 9.63566661e-01

1.70014817e-02 3.33105767e-04]]

Prediction: [ 0.96356666]

[INFO] Predicted: 7, Actual: 7

Probability: [[ 0.19938287 0.0183992 0.00207316 0.0084355 0.00370245 0.00194393

0.00068638 0.00841228 0.21520843 0.54175574]]

Prediction: [ 0.54175574]

[INFO] Predicted: 9, Actual: 9

Probability: [[ 0.01651974 0.0289838 0.01687341 0.08580466 0.00210735 0.00135384

0.75394803 0.001259 0.0369886 0.05616162]]

Prediction: [ 0.75394803]

[INFO] Predicted: 6, Actual: 6

Probability: [[ 5.24855917e-04 1.51539311e-07 2.61628302e-04 3.44149594e-05

3.65500361e-01 5.03372692e-04 3.35481104e-07 6.33163214e-01

9.65809249e-07 1.06677771e-05]]

Prediction: [ 0.63316321]

[INFO] Predicted: 7, Actual: 4
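The predicted class is simply the index of the largest probability in each row. The sketch below repeats that step in plain Python for the second probability row printed above, then tallies the ten (predicted, actual) pairs from the [INFO] lines. The helper `argmax` is written here for illustration; the numbers are copied from the output.

```python
def argmax(values):
    """Index of the largest value."""
    return max(range(len(values)), key=lambda i: values[i])

# Second probability row from the output above.
probs = [0.01599916, 0.16678077, 0.00981532, 0.00189127, 0.00097248,
         0.00574225, 0.00214783, 0.00203614, 0.77152532, 0.02308941]
predicted_class = argmax(probs)

# (predicted, actual) pairs copied from the [INFO] lines above.
pairs = [(1, 1), (8, 8), (1, 1), (1, 8), (9, 9),
         (3, 3), (7, 7), (9, 9), (6, 6), (7, 4)]
correct = sum(1 for pred, actual in pairs if pred == actual)
accuracy = correct / len(pairs)

print(predicted_class, correct, accuracy)
```

Eight of the ten samples are classified correctly, which happens to sit close to the 0.8035 test accuracy from Step 9, although ten images are far too few to draw conclusions from.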

-mkc

Written on September 30, 2017