CIFAR-10 Demo V2

Step 1: Organize Imports

from keras.datasets import cifar10

from keras.utils import np_utils

from keras.models import Sequential

from keras.layers.core import Dense, Dropout, Activation, Flatten

from keras.layers.convolutional import Conv2D, MaxPooling2D

from keras.optimizers import SGD, Adam, RMSprop

import matplotlib.pyplot as plt

%matplotlib inline

Using TensorFlow backend.

Step 2: Set the constants

CIFAR-10 is a set of 60,000 color images, each 32x32 pixels with 3 channels (RGB), split into 50,000 training and 10,000 test images across 10 classes.

IMG_CHANNELS = 3

IMG_ROWS = 32

IMG_COLS = 32

BATCH_SIZE = 128

NB_EPOCH = 40

NB_CLASSES = 10

VERBOSE = 1

VALIDATION_SPLIT = 0.2

OPTIM = RMSprop()

Step 3: Load dataset

(X_train, y_train), (X_test, y_test) = cifar10.load_data()

print('X_train shape:', X_train.shape)

print(X_train.shape[0], 'train samples')

print(X_test.shape[0], 'test samples')

X_train shape: (50000, 32, 32, 3)

50000 train samples

10000 test samples
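The effect of `VALIDATION_SPLIT` on these 50,000 training samples can be worked out before training starts. This is a minimal sketch that assumes the constants from Step 2; the names `n_val`, `n_fit`, and `steps_per_epoch` are illustrative and do not appear in the original notebook.

```python
import math

N_TRAIN = 50000          # training samples reported by X_train.shape[0]
VALIDATION_SPLIT = 0.2   # same value as in Step 2
BATCH_SIZE = 128         # same value as in Step 2

n_val = int(N_TRAIN * VALIDATION_SPLIT)          # samples held out for validation
n_fit = N_TRAIN - n_val                          # samples used for weight updates
steps_per_epoch = math.ceil(n_fit / BATCH_SIZE)  # mini-batches per epoch, the last one smaller

print(n_fit, n_val, steps_per_epoch)
```

These figures agree with the "Train on 40000 samples, validate on 10000 samples" line that Keras prints in Step 7. Keras takes the validation samples from the end of the arrays, before any shuffling.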

Step 4: Convert to categorical (OHE)

Y_train = np_utils.to_categorical(y_train, NB_CLASSES)

Y_test = np_utils.to_categorical(y_test, NB_CLASSES)
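To make the one-hot encoding concrete, here is a small NumPy sketch of the same idea. It is not the Keras implementation; `one_hot` and `y_example` are hypothetical names, and the labels are made up but shaped like `y_train`, which has shape `(n, 1)`.

```python
import numpy as np

def one_hot(labels, num_classes):
    """Return an (n_samples, num_classes) array with a single 1 per row."""
    labels = np.asarray(labels, dtype="int64").ravel()  # flatten (n, 1) to (n,)
    encoded = np.zeros((labels.size, num_classes), dtype="float32")
    encoded[np.arange(labels.size), labels] = 1.0       # set one column per row
    return encoded

y_example = np.array([[6], [9], [0]])  # made-up labels shaped like y_train
print(one_hot(y_example, 10))
```

Each row has a 1 in the column of its class and 0 elsewhere, which is the target format `categorical_crossentropy` expects.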

Step 5: Convert to float and normalize

X_train = X_train.astype('float32')

X_test = X_test.astype('float32')

X_train /= 255

X_test /= 255
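The cast to `float32` has to come before the division. The sketch below uses made-up pixel values to show the resulting range, and what recent NumPy versions do when the in-place division is attempted on the raw `uint8` array.

```python
import numpy as np

pixels = np.array([[0, 128, 255]], dtype="uint8")  # made-up pixel values

scaled = pixels.astype("float32")  # astype returns a new float array
scaled /= 255                      # in-place division works on floats

print(scaled.dtype, scaled.min(), scaled.max())

# Without the cast, recent NumPy versions refuse to write the float result
# of a true division back into an integer array.
try:
    pixels /= 255
except TypeError as err:
    print("uint8 in-place division failed:", type(err).__name__)
```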

Step 6: Define the ConvNet model v2

model = Sequential()

model.add(Conv2D(32, (3, 3), padding='same',

                 input_shape=(IMG_ROWS, IMG_COLS, IMG_CHANNELS)))

model.add(Activation('relu'))

model.add(Conv2D(32, (3, 3), padding='same'))

model.add(Activation('relu'))

model.add(MaxPooling2D(pool_size=(2, 2)))

model.add(Dropout(0.25))

model.add(Conv2D(64, (3, 3), padding='same'))

model.add(Activation('relu'))

model.add(Conv2D(64, (3, 3)))

model.add(Activation('relu'))

model.add(MaxPooling2D(pool_size=(2, 2)))

model.add(Dropout(0.25))

model.add(Flatten())

model.add(Dense(512))

model.add(Activation('relu'))

model.add(Dropout(0.5))

model.add(Dense(NB_CLASSES))

model.add(Activation('softmax'))

model.summary()

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

conv2d_1 (Conv2D) (None, 32, 32, 32) 896

_________________________________________________________________

activation_1 (Activation) (None, 32, 32, 32) 0

_________________________________________________________________

conv2d_2 (Conv2D) (None, 32, 32, 32) 9248

_________________________________________________________________

activation_2 (Activation) (None, 32, 32, 32) 0

_________________________________________________________________

max_pooling2d_1 (MaxPooling2 (None, 16, 16, 32) 0

_________________________________________________________________

dropout_1 (Dropout) (None, 16, 16, 32) 0

_________________________________________________________________

conv2d_3 (Conv2D) (None, 16, 16, 64) 18496

_________________________________________________________________

activation_3 (Activation) (None, 16, 16, 64) 0

_________________________________________________________________

conv2d_4 (Conv2D) (None, 14, 14, 64) 36928

_________________________________________________________________

activation_4 (Activation) (None, 14, 14, 64) 0

_________________________________________________________________

max_pooling2d_2 (MaxPooling2 (None, 7, 7, 64) 0

_________________________________________________________________

dropout_2 (Dropout) (None, 7, 7, 64) 0

_________________________________________________________________

flatten_1 (Flatten) (None, 3136) 0

_________________________________________________________________

dense_1 (Dense) (None, 512) 1606144

_________________________________________________________________

activation_5 (Activation) (None, 512) 0

_________________________________________________________________

dropout_3 (Dropout) (None, 512) 0

_________________________________________________________________

dense_2 (Dense) (None, 10) 5130

_________________________________________________________________

activation_6 (Activation) (None, 10) 0

=================================================================

Total params: 1,676,842

Trainable params: 1,676,842

Non-trainable params: 0

_________________________________________________________________
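The parameter counts in the summary can be checked by hand. The sketch below recomputes them from the layer definitions in Step 6; `conv2d_params` and `dense_params` are hypothetical helpers written for this check, not Keras functions.

```python
def conv2d_params(kernel_h, kernel_w, in_channels, filters):
    # One weight per kernel cell per input channel, plus one bias, for each filter.
    return (kernel_h * kernel_w * in_channels + 1) * filters

def dense_params(in_units, out_units):
    # One weight per input unit, plus one bias, for each output unit.
    return (in_units + 1) * out_units

layer_params = {
    "conv2d_1": conv2d_params(3, 3, 3, 32),
    "conv2d_2": conv2d_params(3, 3, 32, 32),
    "conv2d_3": conv2d_params(3, 3, 32, 64),
    "conv2d_4": conv2d_params(3, 3, 64, 64),
    "dense_1": dense_params(7 * 7 * 64, 512),  # Flatten yields 3136 features
    "dense_2": dense_params(512, 10),
}

for name, count in layer_params.items():
    print(name, count)
print("total", sum(layer_params.values()))
```

The output shapes follow the same kind of arithmetic: `padding='same'` keeps the spatial size, `conv2d_4` uses the default padding so 16 - 3 + 1 = 14, and each 2x2 max-pooling layer halves the width and height.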


Step 7: Train the model

model.compile(loss='categorical_crossentropy', optimizer=OPTIM,

              metrics=['accuracy'])

history = model.fit(X_train, Y_train, batch_size=BATCH_SIZE,

                    epochs=NB_EPOCH, validation_split=VALIDATION_SPLIT,

                    verbose=VERBOSE)

Train on 40000 samples, validate on 10000 samples

Epoch 1/40

40000/40000 [==============================] - 168s - loss: 1.8409 - acc: 0.3376 - val_loss: 1.4021 - val_acc: 0.5124

Epoch 2/40

40000/40000 [==============================] - 170s - loss: 1.3572 - acc: 0.5147 - val_loss: 1.4109 - val_acc: 0.4924

Epoch 3/40

40000/40000 [==============================] - 171s - loss: 1.1588 - acc: 0.5941 - val_loss: 1.1791 - val_acc: 0.5982

Epoch 4/40

40000/40000 [==============================] - 161s - loss: 1.0340 - acc: 0.6372 - val_loss: 0.9895 - val_acc: 0.6585

Epoch 5/40

40000/40000 [==============================] - 127s - loss: 0.9297 - acc: 0.6771 - val_loss: 0.8833 - val_acc: 0.6954

Epoch 6/40

40000/40000 [==============================] - 126s - loss: 0.8551 - acc: 0.6983 - val_loss: 0.7950 - val_acc: 0.7231

Epoch 7/40

40000/40000 [==============================] - 126s - loss: 0.7974 - acc: 0.7208 - val_loss: 0.9026 - val_acc: 0.6880

Epoch 8/40

40000/40000 [==============================] - 129s - loss: 0.7413 - acc: 0.7405 - val_loss: 0.7866 - val_acc: 0.7321

Epoch 9/40

40000/40000 [==============================] - 128s - loss: 0.7003 - acc: 0.7595 - val_loss: 0.7689 - val_acc: 0.7392

Epoch 10/40

40000/40000 [==============================] - 128s - loss: 0.6648 - acc: 0.7712 - val_loss: 0.6922 - val_acc: 0.7611

Epoch 11/40

40000/40000 [==============================] - 129s - loss: 0.6316 - acc: 0.7799 - val_loss: 0.7737 - val_acc: 0.7380

Epoch 12/40

40000/40000 [==============================] - 129s - loss: 0.6056 - acc: 0.7910 - val_loss: 0.7517 - val_acc: 0.7478

Epoch 13/40

40000/40000 [==============================] - 128s - loss: 0.5870 - acc: 0.7976 - val_loss: 0.6518 - val_acc: 0.7789

Epoch 14/40

40000/40000 [==============================] - 127s - loss: 0.5659 - acc: 0.8030 - val_loss: 0.7717 - val_acc: 0.7508

Epoch 15/40

40000/40000 [==============================] - 127s - loss: 0.5395 - acc: 0.8121 - val_loss: 0.6761 - val_acc: 0.7704

Epoch 16/40

40000/40000 [==============================] - 127s - loss: 0.5240 - acc: 0.8198 - val_loss: 0.7068 - val_acc: 0.7701

Epoch 17/40

40000/40000 [==============================] - 128s - loss: 0.5175 - acc: 0.8219 - val_loss: 0.6366 - val_acc: 0.7841

Epoch 18/40

40000/40000 [==============================] - 128s - loss: 0.4987 - acc: 0.8272 - val_loss: 0.7209 - val_acc: 0.7830

Epoch 19/40

40000/40000 [==============================] - 128s - loss: 0.4976 - acc: 0.8302 - val_loss: 0.6896 - val_acc: 0.7747

Epoch 20/40

40000/40000 [==============================] - 127s - loss: 0.4935 - acc: 0.8344 - val_loss: 0.7343 - val_acc: 0.7634

Epoch 21/40

40000/40000 [==============================] - 128s - loss: 0.4828 - acc: 0.8353 - val_loss: 0.6890 - val_acc: 0.7851

Epoch 22/40

40000/40000 [==============================] - 128s - loss: 0.4857 - acc: 0.8334 - val_loss: 0.7304 - val_acc: 0.7786

Epoch 23/40

40000/40000 [==============================] - 128s - loss: 0.4832 - acc: 0.8361 - val_loss: 0.6999 - val_acc: 0.7786

Epoch 24/40

40000/40000 [==============================] - 126s - loss: 0.4901 - acc: 0.8357 - val_loss: 0.6343 - val_acc: 0.7944

Epoch 25/40

40000/40000 [==============================] - 128s - loss: 0.4855 - acc: 0.8366 - val_loss: 0.7423 - val_acc: 0.7815

Epoch 26/40

40000/40000 [==============================] - 128s - loss: 0.4830 - acc: 0.8387 - val_loss: 0.7201 - val_acc: 0.7828

Epoch 27/40

40000/40000 [==============================] - 127s - loss: 0.4929 - acc: 0.8367 - val_loss: 0.6674 - val_acc: 0.7868

Epoch 28/40

40000/40000 [==============================] - 128s - loss: 0.4936 - acc: 0.8370 - val_loss: 0.6880 - val_acc: 0.7807

Epoch 29/40

40000/40000 [==============================] - 129s - loss: 0.4881 - acc: 0.8371 - val_loss: 0.7414 - val_acc: 0.7989

Epoch 30/40

40000/40000 [==============================] - 128s - loss: 0.4930 - acc: 0.8375 - val_loss: 0.7370 - val_acc: 0.7793

Epoch 31/40

40000/40000 [==============================] - 125s - loss: 0.4990 - acc: 0.8359 - val_loss: 0.7205 - val_acc: 0.7912

Epoch 32/40

40000/40000 [==============================] - 125s - loss: 0.4965 - acc: 0.8385 - val_loss: 0.7114 - val_acc: 0.7941

Epoch 33/40

40000/40000 [==============================] - 125s - loss: 0.5015 - acc: 0.8350 - val_loss: 0.6636 - val_acc: 0.7876

Epoch 34/40

40000/40000 [==============================] - 125s - loss: 0.5102 - acc: 0.8339 - val_loss: 0.7182 - val_acc: 0.7821

Epoch 35/40

40000/40000 [==============================] - 124s - loss: 0.5117 - acc: 0.8342 - val_loss: 0.9394 - val_acc: 0.7765

Epoch 36/40

40000/40000 [==============================] - 132s - loss: 0.5096 - acc: 0.8347 - val_loss: 0.7000 - val_acc: 0.7849

Epoch 37/40

40000/40000 [==============================] - 162s - loss: 0.5229 - acc: 0.8307 - val_loss: 0.7863 - val_acc: 0.7946

Epoch 38/40

40000/40000 [==============================] - 167s - loss: 0.5195 - acc: 0.8333 - val_loss: 0.7503 - val_acc: 0.7882

Epoch 39/40

40000/40000 [==============================] - 167s - loss: 0.5312 - acc: 0.8288 - val_loss: 0.7880 - val_acc: 0.7901

Epoch 40/40

40000/40000 [==============================] - 167s - loss: 0.5297 - acc: 0.8320 - val_loss: 0.8276 - val_acc: 0.7686
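The log shows validation loss levelling off well before epoch 40, so it can help to find the epoch where it was lowest. This is a minimal sketch in plain Python with the `val_loss` values copied from the log above; in the notebook the same list would be available as `history.history['val_loss']`.

```python
# val_loss per epoch, copied from the training log above (epochs 1 to 40)
val_loss = [
    1.4021, 1.4109, 1.1791, 0.9895, 0.8833, 0.7950, 0.9026, 0.7866,
    0.7689, 0.6922, 0.7737, 0.7517, 0.6518, 0.7717, 0.6761, 0.7068,
    0.6366, 0.7209, 0.6896, 0.7343, 0.6890, 0.7304, 0.6999, 0.6343,
    0.7423, 0.7201, 0.6674, 0.6880, 0.7414, 0.7370, 0.7205, 0.7114,
    0.6636, 0.7182, 0.9394, 0.7000, 0.7863, 0.7503, 0.7880, 0.8276,
]

best_index = min(range(len(val_loss)), key=val_loss.__getitem__)
best_epoch = best_index + 1  # the log numbers epochs from 1

print(best_epoch, val_loss[best_index])
```

Keras provides callbacks such as `EarlyStopping` and `ModelCheckpoint` that can act on this signal during training. Whether they would improve the final test accuracy here is not something this post tests.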

Step 8: Test the model

score = model.evaluate(X_test, Y_test,

                       batch_size=BATCH_SIZE, verbose=VERBOSE)

print("\nTest score:", score[0])

print('Test accuracy:', score[1])

9984/10000 [============================>.] - ETA: 0s

Test score: 0.832366293049

Test accuracy: 0.7694

Step 9: Save the model

model_json = model.to_json()

with open('cifar10_architecture.json', 'w') as f:

    f.write(model_json)

model.save_weights('cifar10_weights.h5', overwrite=True)
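For completeness, the two saved files can be turned back into a usable model. The sketch below assumes the Keras 2 API used in this post; `load_cifar10_model` is a hypothetical helper, and the compile settings simply repeat those from Step 7.

```python
def load_cifar10_model(architecture_path="cifar10_architecture.json",
                       weights_path="cifar10_weights.h5"):
    """Rebuild the network from the two files written in Step 9."""
    # Imported lazily so that defining the helper does not require Keras.
    from keras.models import model_from_json

    with open(architecture_path) as f:
        model = model_from_json(f.read())
    model.load_weights(weights_path)
    # The JSON file stores only the architecture, so compile again
    # before calling evaluate().
    model.compile(loss="categorical_crossentropy", optimizer="rmsprop",
                  metrics=["accuracy"])
    return model
```

Calling `load_cifar10_model()` in a fresh session should give a model that can be passed to `evaluate` or `predict` in the same way as in Steps 8 and 12.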

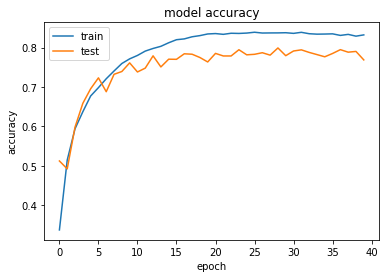

Step 10: Plot the accuracy

print(history.history.keys())

plt.plot(history.history['acc'])

plt.plot(history.history['val_acc'])

plt.title('model accuracy')

plt.ylabel('accuracy')

plt.xlabel('epoch')

plt.legend(['train', 'validation'], loc='upper left')

plt.show()

dict_keys(['val_loss', 'val_acc', 'acc', 'loss'])

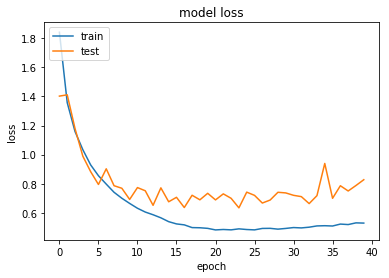

Step 11: Plot loss history

plt.plot(history.history['loss'])

plt.plot(history.history['val_loss'])

plt.title('model loss')

plt.ylabel('loss')

plt.xlabel('epoch')

plt.legend(['train', 'validation'], loc='upper left')

plt.show()

Step 12: Test on random images

import numpy as np

disp_images = []

for i in np.random.choice(np.arange(0, len(Y_test)), size=(10, )):

    probs = model.predict(X_test[np.newaxis, i])

    print('Probability: %s' % probs)

    prediction = probs.argmax(axis=1)

    print('Prediction: %s' % probs[0][prediction])

    image = (X_test[i] * 255).astype("uint8")

    disp_images.append(image)

    print("[INFO] Predicted: {}, Actual: {}".format(prediction[0], np.argmax(Y_test[i])))

    print('\n')

fig = plt.figure(figsize=(20, 50))

for k in range(10):

    plt.subplot(1, 10, k+1)

    plt.gca().axes.get_yaxis().set_visible(False)

    plt.gca().axes.get_xaxis().set_visible(False)

    plt.imshow(disp_images[k])

Probability: [[ 0.00823982 0.07492513 0.51655346 0.08641004 0.0733641 0.01088354

0.09417738 0.05394183 0.07353852 0.00796624]]

Prediction: [ 0.51655346]

[INFO] Predicted: 2, Actual: 2

Probability: [[ 2.81836464e-07 4.90996987e-04 1.12077259e-06 5.08155463e-05

3.15185412e-06 1.71205465e-05 9.99387860e-01 4.33975316e-07

1.02562787e-07 4.79543924e-05]]

Prediction: [ 0.99938786]

[INFO] Predicted: 6, Actual: 6

Probability: [[ 9.67237055e-01 1.24608061e-06 1.15771219e-03 1.19171469e-02

3.35618365e-03 1.62529796e-02 1.01091437e-05 5.46005431e-05

2.22641393e-06 1.06907719e-05]]

Prediction: [ 0.96723706]

[INFO] Predicted: 0, Actual: 0

Probability: [[ 2.57812007e-05 4.92997060e-07 9.79272842e-01 2.81754206e-03

5.42091019e-03 4.45879996e-05 1.46994833e-03 1.24730395e-05

1.08785005e-02 5.68296673e-05]]

Prediction: [ 0.97927284]

[INFO] Predicted: 2, Actual: 2

Probability: [[ 8.05308670e-03 2.07007647e-01 2.70814166e-06 1.98571115e-06

1.30638270e-07 1.65108546e-07 2.85984925e-06 2.01343303e-03

1.57999173e-02 7.67118096e-01]]

Prediction: [ 0.7671181]

[INFO] Predicted: 9, Actual: 1

Probability: [[ 9.55353852e-13 4.53155546e-14 1.19717825e-09 1.95391422e-05

7.50525899e-08 9.99933481e-01 1.76887713e-08 4.68365797e-05

8.24280401e-12 1.51494477e-11]]

Prediction: [ 0.99993348]

[INFO] Predicted: 5, Actual: 5

Probability: [[ 1.29795197e-04 6.80058702e-06 1.00467261e-03 1.44844994e-01

1.51061930e-03 8.39578390e-01 4.21333127e-03 8.70099198e-03

1.48134245e-06 8.91840045e-06]]

Prediction: [ 0.83957839]

[INFO] Predicted: 5, Actual: 5

Probability: [[ 3.35938181e-03 3.68716343e-07 8.58859241e-01 8.22437403e-04

1.28401816e-01 5.08039433e-04 7.75876129e-03 2.32035309e-04

5.76190214e-05 2.64674782e-07]]

Prediction: [ 0.85885924]

[INFO] Predicted: 2, Actual: 2

Probability: [[ 0.37388203 0.00958517 0.05369946 0.00352884 0.01653171 0.00115101

0.06050198 0.0005891 0.47242591 0.00810475]]

Prediction: [ 0.47242591]

[INFO] Predicted: 8, Actual: 0

Probability: [[ 4.87583862e-10 5.77620973e-08 3.60462014e-20 1.80649767e-20

1.69558036e-18 2.65745302e-21 4.81812745e-27 7.39529965e-12

5.07545835e-14 1.00000000e+00]]

Prediction: [ 1.]

[INFO] Predicted: 9, Actual: 9
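The predictions above are class indices. The sketch below maps them to the standard CIFAR-10 class names, using the first probability vector from the output; the list name `CIFAR10_CLASSES` is an illustrative choice and not part of the original notebook.

```python
import numpy as np

# Standard CIFAR-10 label order; index i is the class name for label i.
CIFAR10_CLASSES = ["airplane", "automobile", "bird", "cat", "deer",
                   "dog", "frog", "horse", "ship", "truck"]

# First probability vector printed above.
probs = np.array([[0.00823982, 0.07492513, 0.51655346, 0.08641004, 0.0733641,
                   0.01088354, 0.09417738, 0.05394183, 0.07353852, 0.00796624]])

predicted = int(probs.argmax(axis=1)[0])  # index of the largest probability
print(CIFAR10_CLASSES[predicted], probs[0, predicted])

# The two misclassified samples in the output above, as (predicted, actual) pairs.
for pred, actual in [(9, 1), (8, 0)]:
    print("predicted", CIFAR10_CLASSES[pred], "but was", CIFAR10_CLASSES[actual])
```

Read this way, the two errors in this sample are a truck predicted for an automobile and a ship predicted for an airplane.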

-mkc

Written on September 28, 2017