Deep Learning Hello World Part 5

Deep Learning Hello World! (LeNet-5)

Objective: Implement LeNet-5 for MNIST classification.

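Step 1: Take care of the imports, which include NumPy, the Keras datasets, models, layers, optimizers, and utils, plus Matplotlib and IPython for plotting and display.

Running this cell also tells you whether your set-up is complete: if a package is missing, the import fails with an ImportError.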

from keras import backend as K

from keras.models import Sequential

from keras.models import model_from_json

from keras.layers.convolutional import Conv2D

from keras.layers.convolutional import MaxPooling2D

from keras.layers.core import Activation

from keras.layers.core import Flatten

from keras.layers.core import Dense

from keras.datasets import mnist

from keras.utils import np_utils

from keras.optimizers import Adam

import numpy as np

import matplotlib.pyplot as plt

%matplotlib inline

from IPython.display import Image # for displaying images

from IPython.core.display import HTML

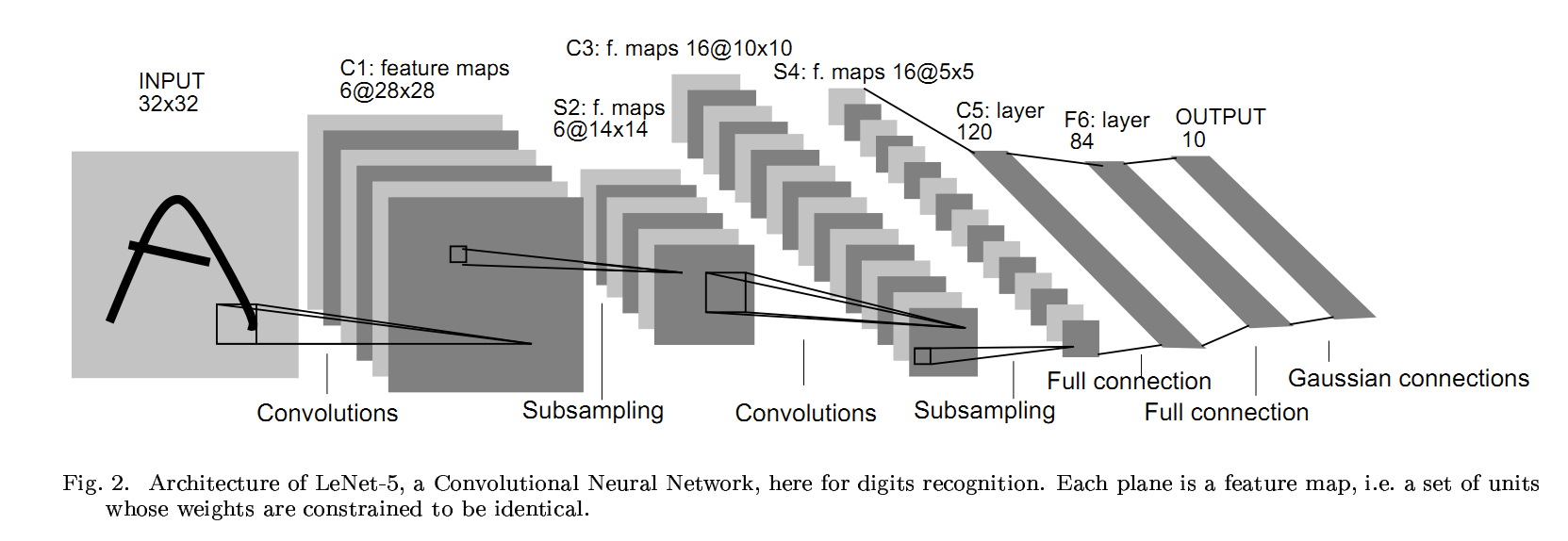
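If you prefer to check the set-up without running the full import cell, a minimal sketch can ask Python which top-level packages are missing. It uses `importlib.util.find_spec`, which locates a package without importing it; the helper name `missing_packages` and the package list are illustrative assumptions, not part of Keras.

```python
import importlib.util

# Top-level packages the import cell relies on (assumed list).
REQUIRED = ["numpy", "matplotlib", "keras", "IPython"]

def missing_packages(names):
    """Return the names that cannot be found on this interpreter's module path."""
    return [name for name in names if importlib.util.find_spec(name) is None]

missing = missing_packages(REQUIRED)
if missing:
    print("Missing packages:", ", ".join(missing))
else:
    print("All required packages are importable.")
```

Finding a package this way does not guarantee its version matches the one used here; the imports below target the Keras 2.x module layout.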

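Step 2: Define the LeNet-5 CNN model. The version below is a modernized LeNet-style variant (ReLU activations, max pooling, 20 and 50 convolution filters, a 500-unit dense layer) rather than the original LeNet-5, which used 6 and 16 feature maps.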

Image(url= "https://world4jason.gitbooks.io/research-log/content/deepLearning/CNN/img/lenet.png")

class LeNet:
    @staticmethod
    def build(input_shape, classes):
        model = Sequential()
        # CONV => RELU => POOL
        model.add(Conv2D(20, kernel_size=5, padding="same",
                         input_shape=input_shape))
        model.add(Activation("relu"))
        model.add(MaxPooling2D(pool_size=(2, 2), strides=(2, 2)))
        # CONV => RELU => POOL
        model.add(Conv2D(50, kernel_size=5, padding="same"))
        model.add(Activation("relu"))
        model.add(MaxPooling2D(pool_size=(2, 2), strides=(2, 2)))
        # FLATTEN => FC (500 units) => RELU
        model.add(Flatten())
        model.add(Dense(500))
        model.add(Activation("relu"))
        # a softmax classifier over `classes` outputs
        model.add(Dense(classes))
        model.add(Activation("softmax"))
        return model
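To see how the two conv/pool stages shrink a 1x28x28 input into the flat vector fed to `Dense(500)`, the following sketch traces the channels-first shapes by hand. It assumes stride-1 convolutions with `padding="same"` (as in `build`) and the default `"valid"` padding of `MaxPooling2D`; the helper names are illustrative.

```python
def conv_same(shape, filters):
    """Stride-1 conv with padding="same": rows/cols unchanged, channels become `filters`."""
    _, rows, cols = shape
    return (filters, rows, cols)

def max_pool(shape, pool=2, stride=2):
    """Pooling with "valid" padding: out = floor((n - pool) / stride) + 1 per spatial axis."""
    channels, rows, cols = shape
    return (channels, (rows - pool) // stride + 1, (cols - pool) // stride + 1)

def trace_lenet(input_shape=(1, 28, 28), filters=(20, 50)):
    """Return the channels-first shape after each conv and pool stage, then the flat size."""
    shapes = []
    shape = input_shape
    for f in filters:
        shape = conv_same(shape, f)
        shapes.append(shape)
        shape = max_pool(shape)
        shapes.append(shape)
    channels, rows, cols = shape
    shapes.append((channels * rows * cols,))  # what Flatten() feeds into Dense(500)
    return shapes

for s in trace_lenet():
    print(s)
```

Because "same" padding keeps 28x28, only the two 2x2 poolings reduce the spatial size: 28 to 14 to 7, giving 50 x 7 x 7 = 2450 features.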

Step 3: Set up some constants used for training and testing the model

NB_EPOCH = 250

BATCH_SIZE = 128

VERBOSE = 1

OPTIMIZER = Adam()

VALIDATION_SPLIT=0.2

IMG_ROWS, IMG_COLS = 28, 28

NB_CLASSES = 10

INPUT_SHAPE = (1, IMG_ROWS, IMG_COLS)

np.random.seed(1983) # makes NumPy's random draws reproducible; backend RNGs (e.g. TensorFlow's) are seeded separately
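What `np.random.seed` guarantees can be shown in a few lines: re-seeding NumPy's global generator with the same value replays the same draws. Depending on the backend, weight initialization may come from a separate RNG (TensorFlow, for example, has its own seed), so this line alone may not make whole training runs identical. The `draws` helper is hypothetical.

```python
import numpy as np

def draws(seed, n=3):
    np.random.seed(seed)      # reset NumPy's global RNG
    return np.random.rand(n)  # n uniform samples in [0, 1)

first = draws(1983)
second = draws(1983)
print(np.array_equal(first, second))  # True: same seed, same draws
```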

Step 4: Load the MNIST dataset, which comes shuffled and split into training and test sets

- X_train holds 60,000 grayscale images of 28x28 pixel values

- X_test holds 10,000 grayscale images of 28x28 pixel values

(X_train, y_train), (X_test, y_test) = mnist.load_data()

# channels-first ("th") ordering: (channels, rows, cols), matching INPUT_SHAPE
K.set_image_data_format("channels_first")

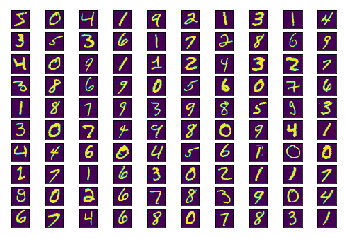

print("First 100 train images:")
for k in range(100):
    plt.subplot(10, 10, k+1)
    plt.gca().axes.get_yaxis().set_visible(False)
    plt.gca().axes.get_xaxis().set_visible(False)
    plt.imshow(X_train[k])

First 100 train images:
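The 100 subplots can also be drawn as a single image. A minimal sketch, assuming the images still have shape (N, 28, 28) as `X_train` does at this point; `tile_images` is a hypothetical helper and the input here is random stand-in data, not MNIST.

```python
import numpy as np

def tile_images(images, rows=10, cols=10):
    """Arrange the first rows*cols images of shape (h, w) into one (rows*h, cols*w) mosaic."""
    n, h, w = images.shape
    if n < rows * cols:
        raise ValueError("need at least rows * cols images")
    grid = images[: rows * cols].reshape(rows, cols, h, w)
    # (rows, cols, h, w) -> (rows, h, cols, w): pixel rows of neighbouring images line up
    return grid.transpose(0, 2, 1, 3).reshape(rows * h, cols * w)

# Random stand-in for X_train[:100]
fake = np.random.randint(0, 256, size=(100, 28, 28)).astype(np.uint8)
mosaic = tile_images(fake)
print(mosaic.shape)  # (280, 280)
```

With Matplotlib, `plt.imshow(tile_images(X_train), cmap="gray")` would show the same 10x10 grid in one axes.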

Step 5: Preprocess the input data: convert it to float32, normalize the pixel values to [0, 1], and reshape it to add a channel axis.

X_train = X_train.astype('float32')

X_test = X_test.astype('float32')

X_train /= 255

X_test /= 255

# we need a 60K x [1 x 28 x 28] shape as input to the CONVNET

X_train = X_train[:, np.newaxis, :, :]

X_test = X_test[:, np.newaxis, :, :]

print(X_train.shape, 'train samples')

print(X_test.shape, 'test samples')

(60000, 1, 28, 28) train samples

(10000, 1, 28, 28) test samples
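The reshape inserts the channel axis at position 1 because this notebook uses channels-first ordering. A small sketch on random stand-in data (not MNIST) compares it with the channels-last layout, which is Keras' default when the image data format is left unchanged.

```python
import numpy as np

# Random stand-in for a batch of images: shape (N, rows, cols), uint8 in [0, 255].
batch = np.random.randint(0, 256, size=(5, 28, 28)).astype(np.uint8)

x = batch.astype("float32") / 255        # float32 values in [0, 1]
channels_first = x[:, np.newaxis, :, :]  # (N, 1, 28, 28): the layout used in this notebook
channels_last = x[..., np.newaxis]       # (N, 28, 28, 1): the "channels_last" layout

print(channels_first.shape, channels_last.shape)  # (5, 1, 28, 28) (5, 28, 28, 1)
# Same pixels either way; only the position of the channel axis differs.
assert np.array_equal(np.moveaxis(channels_first, 1, -1), channels_last)
```

If you switch to channels-last, `INPUT_SHAPE` would also have to become `(IMG_ROWS, IMG_COLS, 1)`.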

Step 6: Convert the class vectors to binary class matrices (one-hot encoding, OHE)

Y_train = np_utils.to_categorical(y_train, NB_CLASSES)

Y_test = np_utils.to_categorical(y_test, NB_CLASSES)
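A sketch of the encoding itself, assuming integer labels in 0..NB_CLASSES-1 as MNIST provides; `one_hot` is an illustrative stand-in, not the Keras implementation of `to_categorical`.

```python
import numpy as np

def one_hot(labels, num_classes):
    """Return a (len(labels), num_classes) float32 matrix with a single 1 per row."""
    labels = np.asarray(labels, dtype=int)
    out = np.zeros((labels.shape[0], num_classes), dtype="float32")
    out[np.arange(labels.shape[0]), labels] = 1.0  # put the 1 at each label's index
    return out

print(one_hot([5, 0, 4], 10))
```

`argmax(axis=1)` inverts the encoding, which is also how class indices are recovered from the softmax output.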

Step 7: Build the LeNet model: Input:1x28x28 ==> Conv 5x5 (20 filters) + ReLU ==> MaxPool 2x2 ==> Conv 5x5 (50 filters) + ReLU ==> MaxPool 2x2 ==> Dense:500 + ReLU ==> Output:10 (with Softmax activation)

model = LeNet.build(input_shape=INPUT_SHAPE, classes=NB_CLASSES)

model.summary()

_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
conv2d_1 (Conv2D)            (None, 20, 28, 28)        520
_________________________________________________________________
activation_1 (Activation)    (None, 20, 28, 28)        0
_________________________________________________________________
max_pooling2d_1 (MaxPooling2 (None, 20, 14, 14)        0
_________________________________________________________________
conv2d_2 (Conv2D)            (None, 50, 14, 14)        25050
_________________________________________________________________
activation_2 (Activation)    (None, 50, 14, 14)        0
_________________________________________________________________
max_pooling2d_2 (MaxPooling2 (None, 50, 7, 7)          0
_________________________________________________________________
flatten_1 (Flatten)          (None, 2450)              0
_________________________________________________________________
dense_1 (Dense)              (None, 500)               1225500
_________________________________________________________________
activation_3 (Activation)    (None, 500)               0
_________________________________________________________________
dense_2 (Dense)              (None, 10)                5010
_________________________________________________________________
activation_4 (Activation)    (None, 10)                0
=================================================================
Total params: 1,256,080
Trainable params: 1,256,080
Non-trainable params: 0
_________________________________________________________________
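The Param # column can be checked by hand: activation, pooling, and flatten layers have no weights, so all 1,256,080 parameters live in the two convolutions and the two dense layers. A short sketch of the arithmetic (helper names are illustrative):

```python
def conv2d_params(filters, kernel, in_channels):
    # one kernel x kernel x in_channels weight tensor per filter, plus one bias per filter
    return filters * (kernel * kernel * in_channels) + filters

def dense_params(units, inputs):
    # full inputs x units weight matrix plus one bias per unit
    return units * inputs + units

counts = {
    "conv2d_1": conv2d_params(20, 5, 1),
    "conv2d_2": conv2d_params(50, 5, 20),
    "dense_1": dense_params(500, 50 * 7 * 7),
    "dense_2": dense_params(10, 500),
}
for name, n in counts.items():
    print(f"{name}: {n:,}")
print(f"total: {sum(counts.values()):,}")  # total: 1,256,080
```

About 97.6% of the parameters (1,225,500) sit in `dense_1`, the layer that connects the 2450 flattened features to 500 units.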

Step 8: Compile the model with categorical_crossentropy loss function, Adam optimizer, and accuracy metric

model.compile(loss='categorical_crossentropy',
              optimizer=OPTIMIZER,
              metrics=['accuracy'])
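Categorical cross-entropy for a one-hot target y and softmax output p is -Σ_k y_k log p_k, which reduces to minus the log-probability assigned to the true class. The NumPy sketch below assumes, as Keras' TensorFlow backend does, that predictions are clipped to [ε, 1 - ε] with ε = 1e-7 (the default `K.epsilon()`) before the log is taken. Under that assumption, a perfectly confident and correct prediction still costs about 1.19e-07 in float32. This is consistent with the training loss settling at 1.1921e-07 in the Step 9 log.

```python
import numpy as np

EPS = np.float32(1e-7)  # assumption: Keras' default K.epsilon()

def categorical_crossentropy(y_true, y_pred):
    """Mean categorical cross-entropy over a batch, with Keras-style clipping."""
    y_true = np.asarray(y_true, dtype=np.float32)
    y_pred = np.asarray(y_pred, dtype=np.float32)
    y_pred = y_pred / y_pred.sum(axis=-1, keepdims=True)  # renormalise each row
    y_pred = np.clip(y_pred, EPS, np.float32(1) - EPS)   # avoid log(0)
    return float(np.mean(-np.sum(y_true * np.log(y_pred), axis=-1)))

y_true = np.eye(10, dtype=np.float32)[[3]]  # one sample with label 3
unsure = [[0.05] * 3 + [0.55] + [0.05] * 6]
perfect = y_true.copy()

print(categorical_crossentropy(y_true, unsure))   # close to -log(0.55)
print(categorical_crossentropy(y_true, perfect))  # about 1.19e-07, not 0
```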

Step 9: Train the model with a batch size of 128 for 250 epochs, holding out 20% of the training data for validation
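The sample counts in the training log follow directly from these settings. A short sketch of the arithmetic, assuming Keras' documented behaviour for `validation_split`, which takes the validation samples from the end of the arrays before any shuffling:

```python
import math

n_train_total = 60000   # rows in X_train (Step 4)
validation_split = 0.2  # VALIDATION_SPLIT
batch_size = 128        # BATCH_SIZE
epochs = 250            # NB_EPOCH

# The last 20% of the rows are held out; the split is not stratified by class.
split_at = int(n_train_total * (1.0 - validation_split))
n_fit, n_val = split_at, n_train_total - split_at

batches_per_epoch = math.ceil(n_fit / batch_size)  # a final partial batch would count too
total_updates = batches_per_epoch * epochs

print(n_fit, n_val)                      # 48000 12000
print(batches_per_epoch, total_updates)  # 375 93750
```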

history = model.fit(X_train, Y_train,
                    batch_size=BATCH_SIZE, epochs=NB_EPOCH,
                    verbose=VERBOSE, validation_split=VALIDATION_SPLIT)

Train on 48000 samples, validate on 12000 samples

Epoch 1/250

48000/48000 [==============================] - 6s - loss: 0.0030 - acc: 0.9990 - val_loss: 0.0417 - val_acc: 0.9908

Epoch 2/250

48000/48000 [==============================] - 6s - loss: 0.0021 - acc: 0.9994 - val_loss: 0.0736 - val_acc: 0.9873

Epoch 3/250

48000/48000 [==============================] - 6s - loss: 0.0018 - acc: 0.9995 - val_loss: 0.0454 - val_acc: 0.9916

Epoch 4/250

48000/48000 [==============================] - 6s - loss: 0.0043 - acc: 0.9987 - val_loss: 0.0511 - val_acc: 0.9900

Epoch 5/250

48000/48000 [==============================] - 6s - loss: 0.0033 - acc: 0.9989 - val_loss: 0.0636 - val_acc: 0.9893

Epoch 6/250

48000/48000 [==============================] - 6s - loss: 0.0040 - acc: 0.9988 - val_loss: 0.0474 - val_acc: 0.9910

Epoch 7/250

48000/48000 [==============================] - 6s - loss: 9.1074e-04 - acc: 0.9998 - val_loss: 0.0420 - val_acc: 0.9930

Epoch 8/250

48000/48000 [==============================] - 6s - loss: 3.2303e-05 - acc: 1.0000 - val_loss: 0.0431 - val_acc: 0.9927

Epoch 9/250

48000/48000 [==============================] - 6s - loss: 8.9252e-06 - acc: 1.0000 - val_loss: 0.0429 - val_acc: 0.9934

Epoch 10/250

48000/48000 [==============================] - 6s - loss: 6.4166e-06 - acc: 1.0000 - val_loss: 0.0432 - val_acc: 0.9933

Epoch 11/250

48000/48000 [==============================] - 6s - loss: 4.9292e-06 - acc: 1.0000 - val_loss: 0.0436 - val_acc: 0.9935

Epoch 12/250

48000/48000 [==============================] - 6s - loss: 3.9686e-06 - acc: 1.0000 - val_loss: 0.0439 - val_acc: 0.9935

Epoch 13/250

48000/48000 [==============================] - 6s - loss: 3.2273e-06 - acc: 1.0000 - val_loss: 0.0442 - val_acc: 0.9935

Epoch 14/250

48000/48000 [==============================] - 6s - loss: 2.6348e-06 - acc: 1.0000 - val_loss: 0.0445 - val_acc: 0.9935

Epoch 15/250

48000/48000 [==============================] - 6s - loss: 2.1793e-06 - acc: 1.0000 - val_loss: 0.0449 - val_acc: 0.9935

Epoch 16/250

48000/48000 [==============================] - 6s - loss: 1.8254e-06 - acc: 1.0000 - val_loss: 0.0452 - val_acc: 0.9936

Epoch 17/250

48000/48000 [==============================] - 6s - loss: 1.5246e-06 - acc: 1.0000 - val_loss: 0.0456 - val_acc: 0.9936

Epoch 18/250

48000/48000 [==============================] - 6s - loss: 1.2681e-06 - acc: 1.0000 - val_loss: 0.0459 - val_acc: 0.9936

Epoch 19/250

48000/48000 [==============================] - 6s - loss: 1.0713e-06 - acc: 1.0000 - val_loss: 0.0463 - val_acc: 0.9935

Epoch 20/250

48000/48000 [==============================] - 6s - loss: 8.8055e-07 - acc: 1.0000 - val_loss: 0.0465 - val_acc: 0.9936

Epoch 21/250

48000/48000 [==============================] - 6s - loss: 7.7420e-07 - acc: 1.0000 - val_loss: 0.0469 - val_acc: 0.9934

Epoch 22/250

48000/48000 [==============================] - 6s - loss: 6.4383e-07 - acc: 1.0000 - val_loss: 0.0472 - val_acc: 0.9934

Epoch 23/250

48000/48000 [==============================] - 6s - loss: 5.4621e-07 - acc: 1.0000 - val_loss: 0.0476 - val_acc: 0.9934

Epoch 24/250

48000/48000 [==============================] - 6s - loss: 4.7299e-07 - acc: 1.0000 - val_loss: 0.0479 - val_acc: 0.9934

Epoch 25/250

48000/48000 [==============================] - 6s - loss: 4.1923e-07 - acc: 1.0000 - val_loss: 0.0482 - val_acc: 0.9934

Epoch 26/250

48000/48000 [==============================] - 6s - loss: 3.6452e-07 - acc: 1.0000 - val_loss: 0.0485 - val_acc: 0.9934

Epoch 27/250

48000/48000 [==============================] - 6s - loss: 3.1688e-07 - acc: 1.0000 - val_loss: 0.0487 - val_acc: 0.9935

Epoch 28/250

48000/48000 [==============================] - 6s - loss: 2.8225e-07 - acc: 1.0000 - val_loss: 0.0490 - val_acc: 0.9934

Epoch 29/250

48000/48000 [==============================] - 6s - loss: 2.5075e-07 - acc: 1.0000 - val_loss: 0.0493 - val_acc: 0.9933

Epoch 30/250

48000/48000 [==============================] - 6s - loss: 2.2580e-07 - acc: 1.0000 - val_loss: 0.0496 - val_acc: 0.9933

Epoch 31/250

48000/48000 [==============================] - 6s - loss: 2.0984e-07 - acc: 1.0000 - val_loss: 0.0499 - val_acc: 0.9933

Epoch 32/250

48000/48000 [==============================] - 6s - loss: 1.9287e-07 - acc: 1.0000 - val_loss: 0.0501 - val_acc: 0.9933

Epoch 33/250

48000/48000 [==============================] - 6s - loss: 1.7815e-07 - acc: 1.0000 - val_loss: 0.0505 - val_acc: 0.9934

Epoch 34/250

48000/48000 [==============================] - 6s - loss: 1.6738e-07 - acc: 1.0000 - val_loss: 0.0507 - val_acc: 0.9933

Epoch 35/250

48000/48000 [==============================] - 6s - loss: 1.5871e-07 - acc: 1.0000 - val_loss: 0.0510 - val_acc: 0.9934

Epoch 36/250

48000/48000 [==============================] - 6s - loss: 1.5120e-07 - acc: 1.0000 - val_loss: 0.0514 - val_acc: 0.9933

Epoch 37/250

48000/48000 [==============================] - 6s - loss: 1.4508e-07 - acc: 1.0000 - val_loss: 0.0517 - val_acc: 0.9933

Epoch 38/250

48000/48000 [==============================] - 6s - loss: 1.3985e-07 - acc: 1.0000 - val_loss: 0.0518 - val_acc: 0.9934

Epoch 39/250

48000/48000 [==============================] - 6s - loss: 1.3628e-07 - acc: 1.0000 - val_loss: 0.0522 - val_acc: 0.9933

Epoch 40/250

48000/48000 [==============================] - 6s - loss: 1.3297e-07 - acc: 1.0000 - val_loss: 0.0525 - val_acc: 0.9933

Epoch 41/250

48000/48000 [==============================] - 6s - loss: 1.3011e-07 - acc: 1.0000 - val_loss: 0.0527 - val_acc: 0.9933

Epoch 42/250

48000/48000 [==============================] - 6s - loss: 1.2796e-07 - acc: 1.0000 - val_loss: 0.0531 - val_acc: 0.9933

Epoch 43/250

48000/48000 [==============================] - 6s - loss: 1.2638e-07 - acc: 1.0000 - val_loss: 0.0533 - val_acc: 0.9933

Epoch 44/250

48000/48000 [==============================] - 6s - loss: 1.2478e-07 - acc: 1.0000 - val_loss: 0.0536 - val_acc: 0.9933

Epoch 45/250

48000/48000 [==============================] - 6s - loss: 1.2356e-07 - acc: 1.0000 - val_loss: 0.0540 - val_acc: 0.9934

Epoch 46/250

48000/48000 [==============================] - 6s - loss: 1.2264e-07 - acc: 1.0000 - val_loss: 0.0543 - val_acc: 0.9934

Epoch 47/250

48000/48000 [==============================] - 6s - loss: 1.2195e-07 - acc: 1.0000 - val_loss: 0.0544 - val_acc: 0.9933

Epoch 48/250

48000/48000 [==============================] - 6s - loss: 1.2130e-07 - acc: 1.0000 - val_loss: 0.0548 - val_acc: 0.9933

Epoch 49/250

48000/48000 [==============================] - 6s - loss: 1.2081e-07 - acc: 1.0000 - val_loss: 0.0552 - val_acc: 0.9933

Epoch 50/250

48000/48000 [==============================] - 6s - loss: 1.2042e-07 - acc: 1.0000 - val_loss: 0.0554 - val_acc: 0.9933

Epoch 51/250

48000/48000 [==============================] - 6s - loss: 1.2011e-07 - acc: 1.0000 - val_loss: 0.0557 - val_acc: 0.9934

Epoch 52/250

48000/48000 [==============================] - 6s - loss: 1.1991e-07 - acc: 1.0000 - val_loss: 0.0559 - val_acc: 0.9933

Epoch 53/250

48000/48000 [==============================] - 6s - loss: 1.1971e-07 - acc: 1.0000 - val_loss: 0.0561 - val_acc: 0.9933

Epoch 54/250

48000/48000 [==============================] - 6s - loss: 1.1958e-07 - acc: 1.0000 - val_loss: 0.0562 - val_acc: 0.9933

Epoch 55/250

48000/48000 [==============================] - 6s - loss: 1.1946e-07 - acc: 1.0000 - val_loss: 0.0564 - val_acc: 0.9933

Epoch 56/250

48000/48000 [==============================] - 6s - loss: 1.1939e-07 - acc: 1.0000 - val_loss: 0.0567 - val_acc: 0.9933

Epoch 57/250

48000/48000 [==============================] - 6s - loss: 1.1933e-07 - acc: 1.0000 - val_loss: 0.0568 - val_acc: 0.9933

Epoch 58/250

48000/48000 [==============================] - 6s - loss: 1.1930e-07 - acc: 1.0000 - val_loss: 0.0571 - val_acc: 0.9933

Epoch 59/250

48000/48000 [==============================] - 6s - loss: 1.1927e-07 - acc: 1.0000 - val_loss: 0.0573 - val_acc: 0.9933

Epoch 60/250

48000/48000 [==============================] - 6s - loss: 1.1925e-07 - acc: 1.0000 - val_loss: 0.0574 - val_acc: 0.9933

Epoch 61/250

48000/48000 [==============================] - 6s - loss: 1.1924e-07 - acc: 1.0000 - val_loss: 0.0574 - val_acc: 0.9933

Epoch 62/250

48000/48000 [==============================] - 6s - loss: 1.1924e-07 - acc: 1.0000 - val_loss: 0.0577 - val_acc: 0.9934

Epoch 63/250

48000/48000 [==============================] - 6s - loss: 1.1922e-07 - acc: 1.0000 - val_loss: 0.0577 - val_acc: 0.9933

Epoch 64/250

48000/48000 [==============================] - 6s - loss: 1.1922e-07 - acc: 1.0000 - val_loss: 0.0579 - val_acc: 0.9933

Epoch 65/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0580 - val_acc: 0.9933

Epoch 66/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0580 - val_acc: 0.9933

Epoch 67/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0580 - val_acc: 0.9933

Epoch 68/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0580 - val_acc: 0.9933

Epoch 69/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0581 - val_acc: 0.9933

Epoch 70/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0582 - val_acc: 0.9933

Epoch 71/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0582 - val_acc: 0.9933

Epoch 72/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0582 - val_acc: 0.9933

Epoch 73/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0581 - val_acc: 0.9933

Epoch 74/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0582 - val_acc: 0.9933

Epoch 75/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0582 - val_acc: 0.9933

Epoch 76/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0582 - val_acc: 0.9933

Epoch 77/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 78/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 79/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 80/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 81/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 82/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 83/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 84/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 85/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 86/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 87/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 88/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 89/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 90/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 91/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 92/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 93/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 94/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 95/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 96/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 97/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 98/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 99/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 100/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 101/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 102/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 103/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 104/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 105/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 106/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 107/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 108/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 109/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 110/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 111/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 112/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 113/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 114/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 115/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 116/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 117/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 118/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 119/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 120/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 121/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 122/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 123/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 124/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 125/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 126/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 127/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 128/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 129/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 130/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 131/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 132/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 133/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 134/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 135/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 136/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 137/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 138/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 139/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 140/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 141/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 142/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 143/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 144/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 145/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 146/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 147/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 148/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 149/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 150/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 151/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 152/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 153/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 154/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 155/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 156/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 157/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 158/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 159/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 160/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 161/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 162/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 163/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 164/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 165/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 166/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 167/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 168/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 169/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 170/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 171/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 172/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 173/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 174/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 175/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 176/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 177/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 178/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 179/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 180/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 181/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

Epoch 182/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933

[Epochs 183/250 to 249/250 omitted: each printed exactly the same progress line as the epochs immediately before and after, i.e. loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933]

Epoch 250/250

48000/48000 [==============================] - 6s - loss: 1.1921e-07 - acc: 1.0000 - val_loss: 0.0584 - val_acc: 0.9933
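In the log above, the last several dozen epochs all report the same numbers: training loss sits at 1.1921e-07 and val_loss stays at 0.0584, so those epochs cost compute without changing validation performance. Keras ships a callback for this case, keras.callbacks.EarlyStopping, passed to model.fit via callbacks=[...]. The sketch below is a simplified pure-Python re-implementation of its patience rule, written only to show the logic; the function name early_stop_epoch and the sample history are hypothetical, and the exact off-by-one behavior of the real callback may differ between Keras versions.

```python
def early_stop_epoch(val_losses, patience=5, min_delta=0.0):
    """Return the 1-based epoch at which a patience rule would stop training,
    or None if it never triggers.

    Simplified sketch of the idea behind keras.callbacks.EarlyStopping:
    stop once `patience` consecutive epochs pass without val_loss improving
    on the best value seen so far by more than `min_delta`.
    """
    best = float("inf")
    wait = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best - min_delta:
            best = loss  # new best value: reset the counter
            wait = 0
        else:
            wait += 1  # no improvement this epoch
            if wait >= patience:
                return epoch
    return None


# Hypothetical history: improves for 3 epochs, then plateaus at 0.0584
history_val_loss = [0.09, 0.07, 0.0584] + [0.0584] * 10
print(early_stop_epoch(history_val_loss, patience=5))  # 8
```

In the notebook itself, the equivalent would be adding from keras.callbacks import EarlyStopping and passing callbacks=[EarlyStopping(monitor='val_loss', patience=5)] to model.fit, which would likely have ended this run long before epoch 250.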

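Step 10: Evaluate the model on the test dataset (10,000 images). model.evaluate returns [loss, accuracy], so the "Test score" printed below is the categorical cross-entropy loss on the test set and score[1] is the test accuracy.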

score = model.evaluate(X_test, Y_test, verbose=VERBOSE)

print("\nTest score:", score[0])

print('Test accuracy:', score[1])

9824/10000 [============================>.] - ETA: 0s

Test score: 0.051760694336

Test accuracy: 0.993
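To make the two numbers concrete, the numpy sketch below recomputes a mean categorical cross-entropy and an argmax accuracy from predicted probabilities and one-hot labels. In the notebook, probs would come from model.predict(X_test) and one_hot would be Y_test; the helper name and the clipping constant eps=1e-7 (chosen to mirror Keras's default epsilon) are assumptions of this sketch, not Keras's own code.

```python
import numpy as np

def crossentropy_and_accuracy(probs, one_hot, eps=1e-7):
    """Mean categorical cross-entropy and accuracy for a batch.

    probs   : (n, classes) predicted probabilities
    one_hot : (n, classes) one-hot true labels
    eps     : clip value to avoid log(0) (assumed, mirrors Keras's default epsilon)
    """
    probs = np.clip(probs, eps, 1.0 - eps)
    # cross-entropy per sample: -sum_k y_k * log(p_k), then averaged
    loss = float(np.mean(-np.sum(one_hot * np.log(probs), axis=1)))
    # accuracy: fraction of samples whose argmax prediction matches the label
    acc = float(np.mean(np.argmax(probs, axis=1) == np.argmax(one_hot, axis=1)))
    return loss, acc


# Toy example: 3 samples, 3 classes; the last sample is misclassified
probs = np.array([[0.9, 0.05, 0.05],
                  [0.1, 0.8, 0.1],
                  [0.6, 0.3, 0.1]])
one_hot = np.eye(3)
loss, acc = crossentropy_and_accuracy(probs, one_hot)
print(round(loss, 4), round(acc, 4))  # 0.877 0.6667
```

The loss rewards confident correct predictions (the 0.9 and 0.8 rows contribute little), while the single wrong prediction with probability 0.1 on the true class dominates the average.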

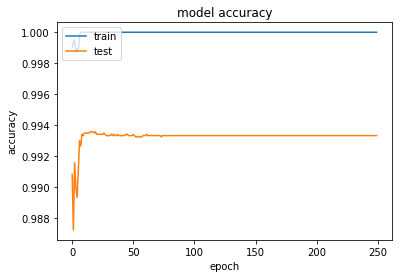

Step 11: Plot the accuracy from history

print(history.history.keys())

plt.plot(history.history['acc'])

plt.plot(history.history['val_acc'])

plt.title('model accuracy')

plt.ylabel('accuracy')

plt.xlabel('epoch')

plt.legend(['train', 'validation'], loc='upper left')  # val_acc comes from the 20% validation split, not the test set

plt.show()

dict_keys(['val_loss', 'val_acc', 'loss', 'acc'])

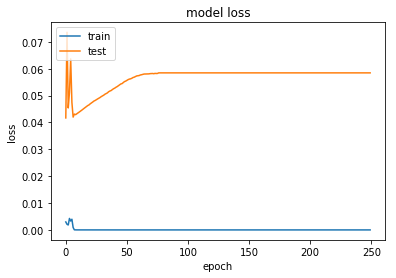
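The printed keys ('acc', 'val_acc') reflect the Keras version used here; newer Keras releases name the same metric 'accuracy' and 'val_accuracy' when the model is compiled with metrics=['accuracy']. The helper below is a hypothetical addition (not part of Keras) that picks whichever key exists and reports the epoch with the best validation accuracy, which is often more informative than the final epoch. The sample history is made up for illustration.

```python
def best_val_epoch(history_dict):
    """Return (metric_key, best_epoch, best_value) for validation accuracy.

    history_dict is the history.history dict returned by model.fit.
    Accepts both the older 'val_acc' key and the newer 'val_accuracy' key.
    """
    for key in ("val_acc", "val_accuracy"):
        if key in history_dict:
            values = history_dict[key]
            # index of the first maximum, converted to a 1-based epoch number
            best_idx = max(range(len(values)), key=lambda i: values[i])
            return key, best_idx + 1, values[best_idx]
    raise KeyError("no validation accuracy key found in history")


# Hypothetical history (not the notebook's real numbers)
h = {"acc": [0.95, 0.99, 1.0], "val_acc": [0.98, 0.9933, 0.9930],
     "loss": [0.2, 0.03, 0.001], "val_loss": [0.06, 0.05, 0.058]}
print(best_val_epoch(h))  # ('val_acc', 2, 0.9933)
```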

Step 12: Plot the loss from history

plt.plot(history.history['loss'])

plt.plot(history.history['val_loss'])

plt.title('model loss')

plt.ylabel('loss')

plt.xlabel('epoch')

plt.legend(['train', 'validation'], loc='upper left')  # val_loss comes from the 20% validation split, not the test set

plt.show()
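Because the training loss in this run falls to about 1e-07 while val_loss stays near 0.058, the training curve will likely sit flat against zero on a linear axis; calling plt.yscale('log') before plt.show() should make both curves readable. The gap itself can also be summarized numerically. The helper below is an illustrative sketch (the name loss_gap and the sample history are hypothetical) that returns the per-epoch difference val_loss - loss.

```python
import numpy as np

def loss_gap(history_dict):
    """Per-epoch gap between validation loss and training loss.

    A gap that widens while the training loss keeps falling is a
    typical sign of overfitting.
    """
    loss = np.asarray(history_dict["loss"], dtype=float)
    val_loss = np.asarray(history_dict["val_loss"], dtype=float)
    return val_loss - loss


# Hypothetical history ending with values like the last epochs of this run
h = {"loss": [0.2, 0.03, 1.1921e-07], "val_loss": [0.06, 0.05, 0.0584]}
gap = loss_gap(h)
print(gap.round(4))
```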

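[Optional] Step 13: Save the model architecture (serialized) to JSON. to_json() stores only the layer structure and configuration; the weights are saved separately in Step 14.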

model_json = model.to_json()

with open("model.json", "w") as json_file:
    json_file.write(model_json)  # the body of the with-block must be indented

%ls

Volume in drive C is Windows

Volume Serial Number is 4E4C-DF71

Directory of C:\Users\ECE\workspace

21/09/2017 10:47 AM <DIR> .

21/09/2017 10:47 AM <DIR> ..

21/09/2017 10:03 AM <DIR> .ipynb_checkpoints

22/07/2017 07:42 AM <DIR> appium-project

21/09/2017 10:03 AM 136,338 DeepLearningHelloWorldPart4.ipynb

21/09/2017 10:45 AM 139,720 DeepLearningHelloWorldPart5.ipynb

13/09/2017 08:10 PM <DIR> Deep-Learning-with-Keras

13/09/2017 05:03 PM <DIR> five-video-classification-methods

14/09/2017 06:51 AM <DIR> keras

21/09/2017 10:47 AM 3,146 model.json

3 File(s) 279,204 bytes

7 Dir(s) 63,157,465,088 bytes free
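Since model.json holds only the architecture, it is small (3,146 bytes in the listing above). The sketch below parses such a string with the standard json module and lists the layer class names. The exact nesting depends on the Keras version (some versions store the Sequential layer list directly under "config", others under config["layers"]), so the helper handles both; the helper name and the abbreviated sample string are hypothetical stand-ins, not the notebook's actual file.

```python
import json

def layer_classes(model_json):
    """List the layer class names stored in a Keras model JSON string.

    Assumes the usual {"class_name": ..., "config": ...} layout and accepts
    either a bare list of layers or a dict with a "layers" entry.
    """
    spec = json.loads(model_json)
    config = spec["config"]
    layers = config["layers"] if isinstance(config, dict) else config
    return [layer["class_name"] for layer in layers]


# Abbreviated, hand-written example (real files also carry each layer's config)
sample = json.dumps({
    "class_name": "Sequential",
    "config": [{"class_name": "Conv2D", "config": {}},
               {"class_name": "Activation", "config": {}},
               {"class_name": "MaxPooling2D", "config": {}}],
})
print(layer_classes(sample))  # ['Conv2D', 'Activation', 'MaxPooling2D']
```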

[Optional] Step 14: Save the model weights to HDF5 (this requires the h5py package)

model.save_weights("model.h5")

%ls

Volume in drive C is Windows

Volume Serial Number is 4E4C-DF71

Directory of C:\Users\ECE\workspace

21/09/2017 10:47 AM <DIR> .

21/09/2017 10:47 AM <DIR> ..

21/09/2017 10:03 AM <DIR> .ipynb_checkpoints

22/07/2017 07:42 AM <DIR> appium-project

21/09/2017 10:03 AM 136,338 DeepLearningHelloWorldPart4.ipynb

21/09/2017 10:47 AM 124,271 DeepLearningHelloWorldPart5.ipynb

13/09/2017 08:10 PM <DIR> Deep-Learning-with-Keras

13/09/2017 05:03 PM <DIR> five-video-classification-methods

14/09/2017 06:51 AM <DIR> keras

21/09/2017 10:47 AM 5,049,496 model.h5

21/09/2017 10:47 AM 3,146 model.json

4 File(s) 5,313,251 bytes

7 Dir(s) 63,152,427,008 bytes free
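The weights file (5,049,496 bytes) is far larger than the JSON, and a worked parameter count accounts for almost all of it. Assuming the Step 2 architecture with 28x28 single-channel input, "same" padding (so each 2x2 pool halves the spatial size, 28 to 14 to 7) and float32 storage at 4 bytes per parameter, the count comes to 1,256,080 parameters, or 5,024,320 bytes; the remaining ~25 KB is presumably HDF5 metadata. model.summary() or model.count_params() should report the same total from the model itself.

```python
def conv_params(kernel, in_ch, out_ch):
    # one kernel x kernel x in_ch filter per output channel, plus one bias each
    return kernel * kernel * in_ch * out_ch + out_ch

def dense_params(n_in, n_out):
    # full weight matrix plus one bias per output unit
    return n_in * n_out + n_out

conv1 = conv_params(5, 1, 20)     # 520
conv2 = conv_params(5, 20, 50)    # 25,050
flat = 7 * 7 * 50                 # 2,450 features after two 2x2 pools
dense1 = dense_params(flat, 500)  # 1,225,500
dense2 = dense_params(500, 10)    # 5,010

total = conv1 + conv2 + dense1 + dense2
print(total, total * 4)  # 1256080 5024320 (float32 = 4 bytes each)
```

Note that the fully connected Dense(500) layer holds about 97.5% of the parameters, which is typical for LeNet-style networks.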

[Optional] Step 15: Load the saved model

with open('model.json', 'r') as json_file:
    loaded_model_json = json_file.read()

loaded_model = model_from_json(loaded_model_json)

loaded_model.load_weights("model.h5")
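Before evaluating, it can be worth confirming that the reload reproduced the trained weights exactly. Keras models expose get_weights(), which returns a list of numpy arrays; the comparison helper below is a hypothetical addition, demonstrated on plain arrays so it runs without a trained model.

```python
import numpy as np

def same_weights(weights_a, weights_b):
    """True if two lists of weight arrays (as returned by get_weights()) match exactly."""
    if len(weights_a) != len(weights_b):
        return False
    # np.array_equal also returns False when the shapes differ
    return all(np.array_equal(a, b) for a, b in zip(weights_a, weights_b))


# In the notebook: same_weights(model.get_weights(), loaded_model.get_weights())
w = [np.ones((5, 5, 1, 20)), np.zeros(20)]
print(same_weights(w, [x.copy() for x in w]))     # True
print(same_weights(w, [w[0], np.full(20, 0.1)]))  # False
```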

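[Optional] Step 16: Compile and evaluate the loaded model. model_from_json returns an uncompiled model, and neither model.json nor model.h5 stores the loss or optimizer, so the model must be compiled again before evaluate can be called.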

loaded_model.compile(loss='categorical_crossentropy',
                     optimizer=OPTIMIZER,
                     metrics=['accuracy'])

score = loaded_model.evaluate(X_test, Y_test, verbose=VERBOSE)

print("\nTest score:", score[0])

print('Test accuracy:', score[1])

10000/10000 [==============================] - 149s

Test score: 0.051760694336

Test accuracy: 0.993

- mkc